Why Animations Need Bespoke QA

Animations are the most deceptive part of modern interfaces.

They often look “almost right” while still being broken in subtle but critical ways:

- shifting by 1–2 pixels,

- playing with incorrect easing,

- stuttering on weaker devices,

- dropping frames under load,

- desynchronizing from async data.

Most of these defects remain undetected by standard automation and are often overlooked in visual reviews.

In static UI, a 1-px offset is a defect.

In motion, it gives rise to a vague feeling that “something is off.” These are the most dangerous defects because they:

- erode user trust subconsciously,

- are difficult to reproduce,

- silently reach production.

This creates a paradox:

The more visually sophisticated the interface, the higher the risk of hidden motion regressions.

That is why animations require a dedicated QA strategy, not a checkbox inside general UI testing.

Setup: Core Infrastructure for Animation QA

Before discussing tools and tests, the environment must be set up correctly. Without proper setup, animation QA becomes unreliable by definition.

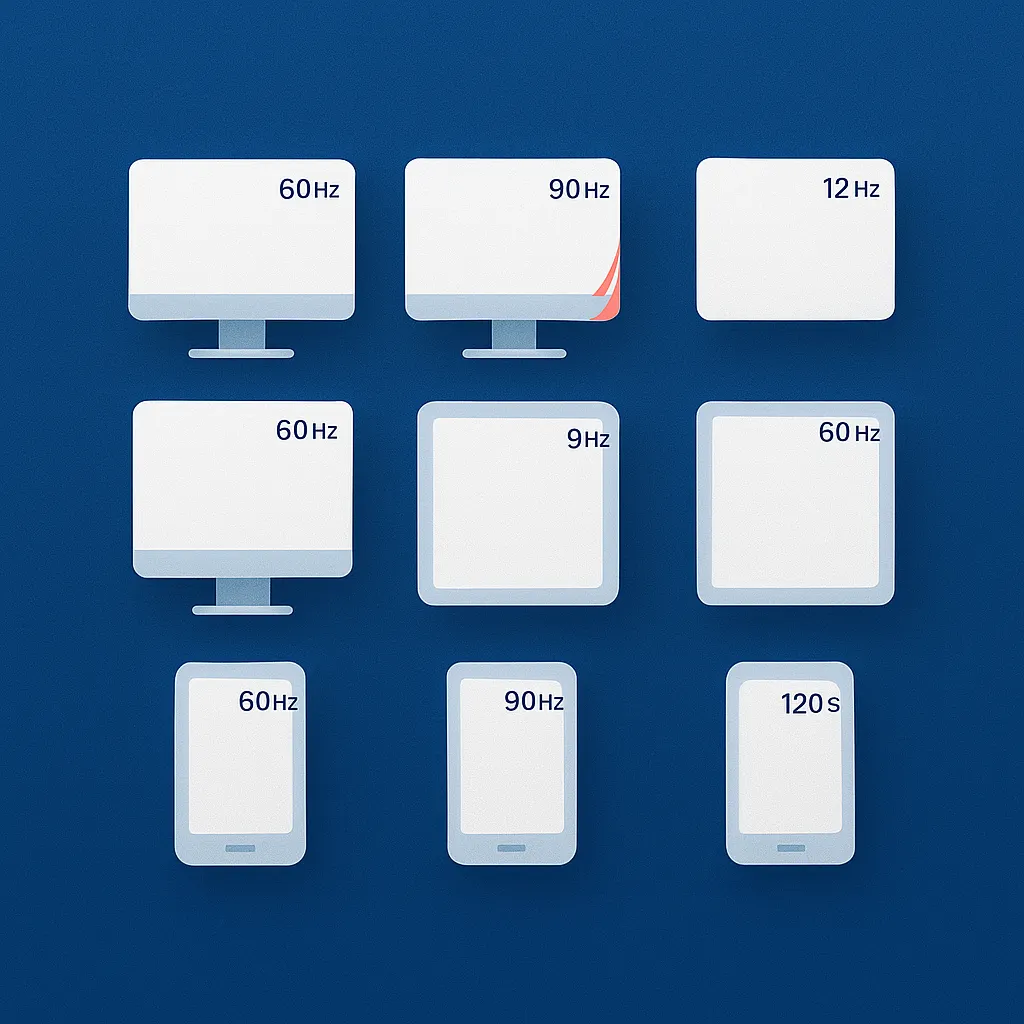

1. Device Lab: mandatory for motion testing

If animations are tested only:

- on one MacBook,

- in one browser,

- on a 120-Hz display,

then you are not testing animations; you are testing a single perception profile.

A realistic baseline lab includes:

Desktop

- Chrome

- Safari

- Firefox

Mobile

- iOS Safari

- Android Chrome

Refresh rates

- 60 Hz

- 90–120 Hz (for Pro-grade devices)

Why this matters:

- different refresh rates change frame intervals,

- different rendering engines apply different compositing rules,

- GPUs affect frame stability and scheduling.

An animation that feels “premium” at 120 Hz can visibly degrade at 60 Hz.

If QA does not observe this delta, it becomes invisible to the team.
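The frame arithmetic behind this delta is simple enough to sketch. The helper names and the 300 ms duration below are illustrative assumptions, not part of any tool:

```javascript
// Frame interval in milliseconds for a given refresh rate.
function frameIntervalMs(hz) {
  return 1000 / hz;
}

// Number of frames an animation of `durationMs` receives at `hz`.
function framesForDuration(durationMs, hz) {
  return Math.round(durationMs / frameIntervalMs(hz));
}

// Longest visible gap when `dropped` consecutive frames are missed.
function gapWithDroppedFrames(hz, dropped) {
  return (dropped + 1) * frameIntervalMs(hz);
}

for (const hz of [60, 120]) {
  console.log(
    `${hz} Hz: interval ${frameIntervalMs(hz).toFixed(2)} ms, ` +
      `300 ms animation = ${framesForDuration(300, hz)} frames, ` +
      `1 dropped frame = ${gapWithDroppedFrames(hz, 1).toFixed(2)} ms gap`
  );
}
```

At 60 Hz the same 300 ms transition gets 18 frames instead of 36. A single dropped frame leaves a 33.33 ms hole instead of 16.67 ms. This is why a regression can stay hidden at 120 Hz and surface at 60 Hz.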

2. Deterministic playback: the non-negotiable requirement

The core problem in animation QA is that animation playback is non-deterministic by default:

- timing depends on CPU load,

- FPS fluctuates,

- async data affects trigger order,

- network conditions shift start time.

Therefore, the first engineering requirement is absolute:

Every animation must be able to run deterministically.

In practice, this means:

- mocked API responses,

- disabled live network,

- synchronized triggers,

- a fixed zero-time origin (t = 0).

Without deterministic playback, true regression testing for motion does not exist.
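One way to meet this requirement is to replace wall-clock time with a clock the test controls. The sketch below uses a minimal, hypothetical VirtualClock (not a library API) that stands in for requestAnimationFrame. Time advances only when the test calls tick(), so the value recorded at a given t is identical across runs, whatever the CPU load. Wiring such a clock into a real page, for example through an init script, is an assumption left out here.

```javascript
// Hypothetical virtual clock: time only moves when the test calls tick(),
// so CPU load and real frame timing cannot influence animation progress.
class VirtualClock {
  constructor() {
    this.now = 0; // fixed zero-time origin (t = 0)
    this.callbacks = [];
  }

  // Same contract as window.requestAnimationFrame: cb(timestampMs).
  requestAnimationFrame(cb) {
    this.callbacks.push(cb);
  }

  // Advance by one frame and flush the callbacks queued before this frame.
  tick(frameMs) {
    this.now += frameMs;
    const due = this.callbacks;
    this.callbacks = [];
    for (const cb of due) cb(this.now);
  }
}

// A linear translateX animation driven by the clock, recording each frame.
function runTranslate(clock, { from, to, durationMs }, samples) {
  const start = clock.now;
  const step = (t) => {
    const p = Math.min((t - start) / durationMs, 1);
    samples.push(Math.round((from + (to - from) * p) * 100) / 100);
    if (p < 1) clock.requestAnimationFrame(step);
  };
  clock.requestAnimationFrame(step);
}

// Hypothetical capture run: 15 ticks of 16 ms against a 200 ms animation.
function capture() {
  const clock = new VirtualClock();
  const samples = [];
  runTranslate(clock, { from: 0, to: 100, durationMs: 200 }, samples);
  for (let i = 0; i < 15; i++) clock.tick(16);
  return samples;
}

console.log(JSON.stringify(capture()) === JSON.stringify(capture())); // true
```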

3. Frame-based validation instead of static screenshots

Traditional visual regression relies on a single screenshot.

For animations, this approach is functionally useless.

The correct model is frame sequencing:

- capture 30–120 frames,

- at a fixed interval (e.g., every 16 ms),

- always at identical time offsets.

Each frame becomes:

- a comparison artifact,

- a measurable data point,

- a reproducible piece of evidence in a regression report.

This transforms animation from “subjective feel” into a quantifiable system under test.
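The frame-sequencing model can be sketched as data in two parts. First, fixed capture offsets from t = 0. Second, a per-frame comparison of one measured value between a baseline run and a candidate run. This assumes the measurement (here, translateX in px) has already been extracted from each frame. The function names and sample values are hypothetical, and the 1.5 px tolerance mirrors the transform-drift threshold given later.

```javascript
// Capture offsets (ms from t = 0) for `count` frames at a fixed interval.
function captureOffsets(count, intervalMs) {
  return Array.from({ length: count }, (_, i) => i * intervalMs);
}

// Compare per-frame measurements (e.g. translateX in px) from a baseline run
// and a candidate run that were captured at the same offsets.
function frameDeviations(baseline, candidate, offsets, tolerance) {
  if (baseline.length !== candidate.length || baseline.length !== offsets.length) {
    throw new Error("runs must be captured at identical offsets");
  }
  const failures = [];
  for (let i = 0; i < baseline.length; i++) {
    const delta = Math.abs(candidate[i] - baseline[i]);
    if (delta > tolerance) {
      failures.push({ frame: i, atMs: offsets[i], delta });
    }
  }
  return failures;
}

const offsets = captureOffsets(5, 16);
const baseline = [0, 10, 20, 30, 40];
const candidate = [0, 10, 24, 30, 40];
console.log(frameDeviations(baseline, candidate, offsets, 1.5));
// reports frame 2 (at 32 ms) with a 4 px delta
```

Reporting the frame index and offset, rather than a single pass/fail, is what turns a diff into evidence.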

Tools & Techniques: How Animations Become Testable

A production-compatible minimum stack for animation QA looks like this:

- Playwright — browser control and scripting

- ffmpeg — PNG stack → video assembly

- pixelmatch — pixel-level frame diffing

- Chrome DevTools Performance API — timing and long-task telemetry

- Web Animations API — motion state inspection

Together, these tools move animation QA from theory into an operational pipeline.

1. Real production-grade frame capture

This is a minimal, reproducible implementation:

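```javascript
const { chromium } = require("playwright");

const url = "http://localhost:3000/"; // placeholder: page under test

(async () => {
  const browser = await chromium.launch();
  const page = await browser.newPage();

  // Deterministic test mode. Init scripts only run on navigations made
  // after registration, so this must come before page.goto().
  await page.addInitScript(() => {
    window.__TEST_MODE__ = true;
  });
  await page.goto(url, { waitUntil: "networkidle" });

  // Force animation start via the app's own "force-start" event.
  await page.evaluate(() => {
    document.querySelector('[data-anim="hero"]')
      .dispatchEvent(new Event("force-start"));
  });

  // Frames at 60 fps offsets. A screenshot takes longer than 16 ms, so
  // instead of sleeping, pause every CSS/WAAPI animation and seek it to
  // t = i * 1000 / 60 ms (rAF-driven JS animations are not covered).
  for (let i = 0; i < 90; i++) {
    await page.evaluate((t) => {
      for (const a of document.getAnimations()) { a.pause(); a.currentTime = t; }
    }, (i * 1000) / 60);
    await page.screenshot({ path: `frames/frame_${i}.png` });
  }

  await browser.close();
})();
```

This enables: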

- pixel-level diffing,

- sub-frame jitter detection,

- detection of easing distortions,

- detection of timing regressions.

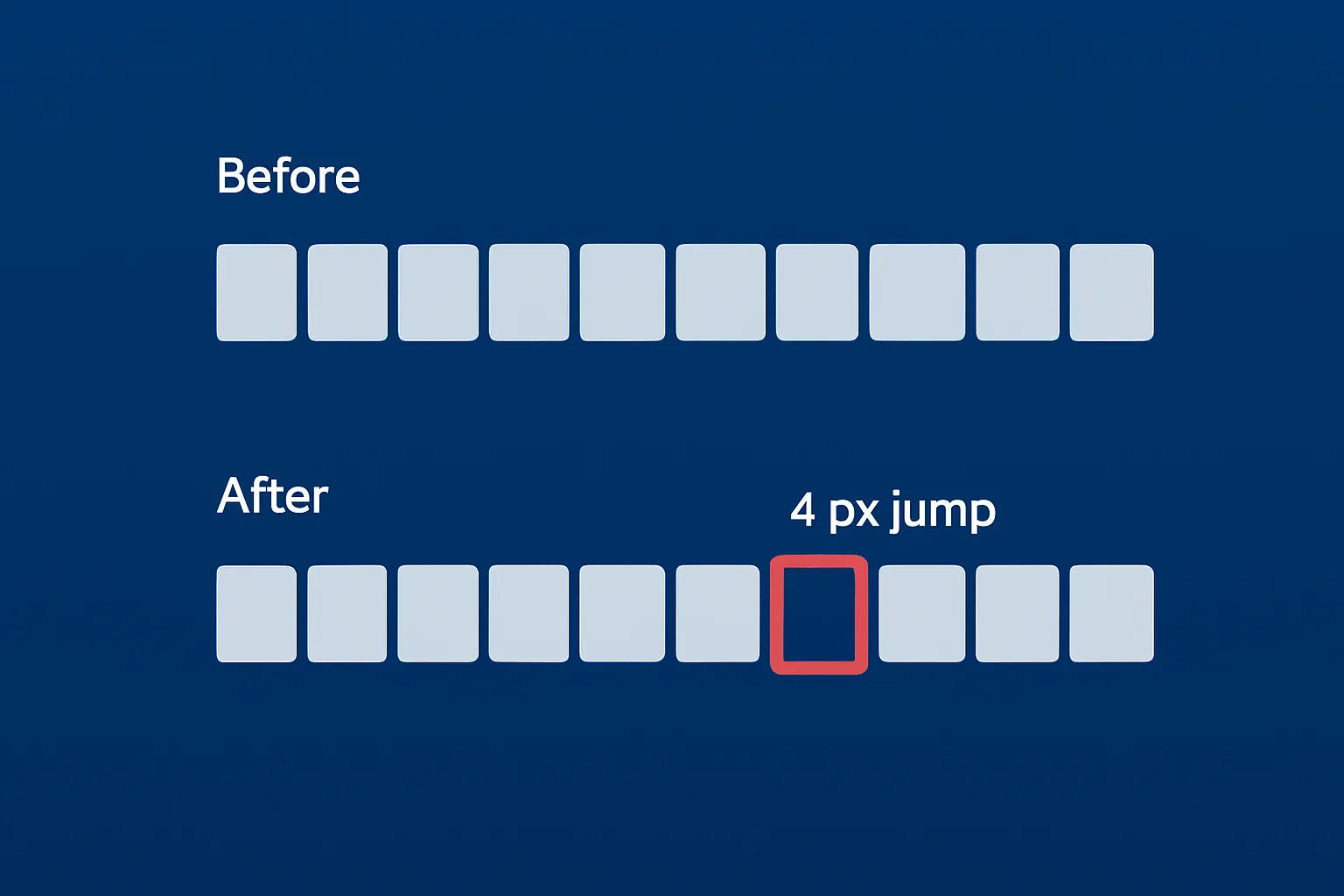

Subjective arguments are replaced with objective proof:

“On frame 18, transformX deviates by 4 px.”
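In practice, pixelmatch handles the per-frame image comparison, with perceptual color distance and anti-aliasing detection. To show the idea without dependencies, here is a deliberately simplified differ over raw RGBA buffers, such as those a PNG decoder produces. It is not pixelmatch's algorithm. The function name and the channel threshold of 25 are assumptions.

```javascript
// Simplified stand-in for a pixel differ: counts pixels whose RGBA channels
// differ by more than `channelThreshold` (0-255). Unlike pixelmatch, it does
// no perceptual color weighting and no anti-aliasing detection.
function diffFrames(a, b, width, height, channelThreshold = 25) {
  if (a.length !== width * height * 4 || b.length !== a.length) {
    throw new Error("frames must be RGBA buffers of the same size");
  }
  let mismatched = 0;
  for (let p = 0; p < a.length; p += 4) {
    for (let c = 0; c < 4; c++) {
      if (Math.abs(a[p + c] - b[p + c]) > channelThreshold) {
        mismatched++;
        break; // count each pixel at most once
      }
    }
  }
  return { mismatched, ratio: mismatched / (width * height) };
}

// Two 2x2 frames: in the second, pixel (1, 0) changes from white to black.
const frameA = new Uint8Array(16).fill(255);
const frameB = Uint8Array.from(frameA);
frameB.set([0, 0, 0, 255], 4);
console.log(diffFrames(frameA, frameB, 2, 2)); // { mismatched: 1, ratio: 0.25 }
```

Run per frame pair, the index of the first failing pair becomes the artifact attached to the regression report.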

2. Web Animations API as a testing interface

The Web Animations API exposes precisely what QA needs:

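```javascript
// Runs in the page context (e.g. inside page.evaluate).
const element = document.querySelector('[data-anim="hero"]');
const [anim] = element.getAnimations();
console.log(anim.currentTime, anim.playState);
```

This allows QA to: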

- validate real runtime timing,

- pause motion at exact states,

- verify multi-element synchronization,

- detect drift between nominal and real easing.

At this point, animation stops being “purely visual” and becomes verifiable as data.
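You can check multi-element synchronization by reading currentTime from several animations at the same moment and comparing their lags against the designed stagger. This sketch assumes the snapshots were taken in the page, for instance by mapping document.getAnimations() to plain objects inside one page.evaluate call, and that each animation has a meaningful id. The ids, stagger values, and 12 ms tolerance are illustrative.

```javascript
// Snapshots read in one page.evaluate call, e.g.
//   document.getAnimations().map((a) => ({ id: a.id, currentTime: a.currentTime }))
// The first snapshot is treated as the leader and assumed to be running.
function syncDrift(snapshots, expectedLagMs, toleranceMs) {
  const [leader, ...rest] = snapshots;
  const drifted = [];
  for (const s of rest) {
    if (s.currentTime === null) {
      drifted.push({ id: s.id, reason: "not playing (currentTime is null)" });
      continue;
    }
    // How far this animation trails the leader, minus the designed stagger.
    const drift = leader.currentTime - s.currentTime - (expectedLagMs[s.id] ?? 0);
    if (Math.abs(drift) > toleranceMs) drifted.push({ id: s.id, drift });
  }
  return drifted;
}

const snapshots = [
  { id: "hero-title", currentTime: 400 },
  { id: "hero-subtitle", currentTime: 320 },
  { id: "hero-cta", currentTime: 210 },
];
// Designed stagger: subtitle trails the title by 80 ms, the CTA by 160 ms.
console.log(syncDrift(snapshots, { "hero-subtitle": 80, "hero-cta": 160 }, 12));
// reports hero-cta with a drift of 30 ms
```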

3. Motion curves are contracts, not preferences

Two animations can:

- start and end at the same positions,

- yet feel completely different.

The difference is almost always easing.

From a QA perspective:

If the easing curve changes unintentionally, it is a regression.

Overshoot removal, shortened deceleration, flattened acceleration — all degrade perceived quality even if endpoints match.
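To treat easing as a contract, you have to evaluate the curve numerically. The sketch below assumes CSS cubic-bezier() semantics with x1 and x2 in [0, 1]. It solves x(s) = progress by bisection (chosen here for simplicity), reads y(s), then compares two curves sample by sample and flags lost overshoot. The "designed" curve is an illustrative overshooting curve, not one taken from the source.

```javascript
// Evaluate a CSS cubic-bezier(x1, y1, x2, y2) timing function at input
// progress x. With x1, x2 in [0, 1], x(s) is monotonic, so bisection works.
function cubicBezier(x1, y1, x2, y2) {
  const coord = (s, p1, p2) =>
    3 * (1 - s) ** 2 * s * p1 + 3 * (1 - s) * s ** 2 * p2 + s ** 3;
  return (x) => {
    if (x <= 0) return 0;
    if (x >= 1) return 1;
    let lo = 0;
    let hi = 1;
    for (let i = 0; i < 50; i++) {
      const mid = (lo + hi) / 2;
      if (coord(mid, x1, x2) < x) lo = mid;
      else hi = mid;
    }
    return coord((lo + hi) / 2, y1, y2);
  };
}

const EPS = 1e-6; // guards the "above 1" check against rounding noise

// Sample both curves; report the largest output difference and whether an
// overshoot (output above 1) present in the expected curve has disappeared.
function compareEasing(expected, actual, samples = 100) {
  let maxDelta = 0;
  let expectedPeak = 0;
  let actualPeak = 0;
  for (let i = 0; i <= samples; i++) {
    const e = expected(i / samples);
    const a = actual(i / samples);
    maxDelta = Math.max(maxDelta, Math.abs(e - a));
    expectedPeak = Math.max(expectedPeak, e);
    actualPeak = Math.max(actualPeak, a);
  }
  return {
    maxDelta,
    overshootLost: expectedPeak > 1 + EPS && actualPeak <= 1 + EPS,
  };
}

const designed = cubicBezier(0.34, 1.56, 0.64, 1); // illustrative overshooting curve
const shipped = cubicBezier(0.25, 0.1, 0.25, 1); // CSS "ease"
console.log(compareEasing(designed, shipped)); // overshootLost is true
```

Both curves start at 0 and end at 1, so an endpoint check passes. Only sampling the whole curve exposes the lost overshoot.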

4. Design as an executable motion contract

High-fidelity animation QA requires extracting behavioral contracts from design.

Motion designers define:

- timestamps,

- easing functions,

- transform and opacity targets.

These become machine-readable specifications:

```json
{
  "t": 240,
  "opacity": 1,
  "x": 0,
  "easing": "cubic-bezier(0.2, 0.9, 0.3, 1)"
}
```

The QA system compares runtime telemetry vs. the design signature. At this point, design becomes an executable specification, not a static reference.
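A contract entry like this one can be checked against runtime telemetry by interpolating the measured values at the contract's timestamp. The sketch assumes t is in milliseconds from animation start and x is in pixels; the source does not state units. Its default tolerances mirror the transform-drift and opacity thresholds listed in the acceptance section. It leaves the easing field to a separate curve comparison. Function names and telemetry values are hypothetical.

```javascript
// Linearly interpolate one property at time t (ms) from telemetry samples
// sorted by time, e.g. [{ t, x, opacity }, ...]; clamps outside the range.
function valueAt(samples, t, key) {
  if (t <= samples[0].t) return samples[0][key];
  for (let i = 1; i < samples.length; i++) {
    const a = samples[i - 1];
    const b = samples[i];
    if (t <= b.t) {
      const k = (t - a.t) / (b.t - a.t);
      return a[key] + (b[key] - a[key]) * k;
    }
  }
  return samples[samples.length - 1][key];
}

// Compare each design keyframe with the interpolated runtime value.
// The easing field is not checked here; that needs a curve comparison.
function checkContract(contract, samples, tol = { x: 1.5, opacity: 0.02 }) {
  const violations = [];
  for (const frame of contract) {
    for (const key of Object.keys(tol)) {
      if (!(key in frame)) continue;
      const actual = valueAt(samples, frame.t, key);
      const delta = Math.abs(actual - frame[key]);
      if (delta > tol[key]) {
        violations.push({ t: frame.t, key, expected: frame[key], actual, delta });
      }
    }
  }
  return violations;
}

const contract = [{ t: 240, opacity: 1, x: 0, easing: "cubic-bezier(0.2, 0.9, 0.3, 1)" }];
const telemetry = [
  { t: 224, x: 6, opacity: 0.98 },
  { t: 256, x: 2, opacity: 1 },
];
console.log(checkContract(contract, telemetry));
// one violation: x is 4 px at t = 240 ms, above the 1.5 px tolerance
```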

Motion performance metrics that actually matter

FPS alone is a weak indicator of motion quality. Metrics that correlate with perceived quality:

- maxFrameGap

- inputDelayToMotion

- longTaskOverlapRatio

- dropped-frame clusters

Long tasks, the raw input for longTaskOverlapRatio, can be collected in the page with a PerformanceObserver:

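```javascript
// Collect long tasks (main-thread tasks longer than 50 ms) into a global
// buffer that the test can read back later, e.g. via page.evaluate.
window.__LONG_TASKS__ = window.__LONG_TASKS__ || [];
new PerformanceObserver((list) => {
  for (const entry of list.getEntries()) {
    if (entry.duration > 50) {
      window.__LONG_TASKS__.push({ startTime: entry.startTime, duration: entry.duration });
    }
  }
}).observe({ type: "longtask", buffered: true });
```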

An animation can run at 60 FPS and still feel broken if the first visible reaction starts 300 ms too late. Users feel this instantly. Traditional metrics often miss it.
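The list above names the metrics but gives no formulas. The sketch below assumes one plausible definition for each. Frame timestamps come from requestAnimationFrame. Long tasks arrive as { startTime, duration } objects. Per-frame positions feed the input-delay check. All function names and sample numbers are hypothetical; the 300 ms input delay mirrors the example in the text.

```javascript
// Assumed metric definitions; all times are in ms (e.g. rAF timestamps).

// Longest interval between consecutive frame timestamps.
function maxFrameGap(frameTimes) {
  let max = 0;
  for (let i = 1; i < frameTimes.length; i++) {
    max = Math.max(max, frameTimes[i] - frameTimes[i - 1]);
  }
  return max;
}

// A gap spanning n nominal intervals hides n - 1 frames; each such gap is a cluster.
function droppedFrameClusters(frameTimes, intervalMs) {
  const clusters = [];
  for (let i = 1; i < frameTimes.length; i++) {
    const gap = frameTimes[i] - frameTimes[i - 1];
    const missed = Math.round(gap / intervalMs) - 1;
    if (missed > 0) clusters.push(missed);
  }
  return clusters;
}

// Share of the animation window [start, end] covered by long tasks given as
// { startTime, duration }; assumes the tasks do not overlap each other.
function longTaskOverlapRatio(tasks, start, end) {
  let covered = 0;
  for (const { startTime, duration } of tasks) {
    const from = Math.max(start, startTime);
    const to = Math.min(end, startTime + duration);
    if (to > from) covered += to - from;
  }
  return covered / (end - start);
}

// Time from the input event to the first frame where x left its resting value.
function inputDelayToMotion(inputTime, frames, restX) {
  const moved = frames.find((f) => f.t >= inputTime && f.x !== restX);
  return moved ? moved.t - inputTime : Infinity;
}

const frameTimes = [0, 17, 33, 83, 100, 117];
const frames = [
  { t: 117, x: 0 },
  { t: 133, x: 0 },
  { t: 400, x: 3 },
];
console.log({
  maxFrameGap: maxFrameGap(frameTimes), // 50
  clusters: droppedFrameClusters(frameTimes, 1000 / 60), // [2]
  longTaskOverlap: longTaskOverlapRatio([{ startTime: 40, duration: 60 }], 0, 200), // 0.3
  inputDelay: inputDelayToMotion(100, frames, 0), // 300
});
```

The sample run has only one irregular interval. Yet it yields a 50 ms gap, a two-frame drop cluster, and a 300 ms delay before any motion, none of which an average FPS figure would show directly.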

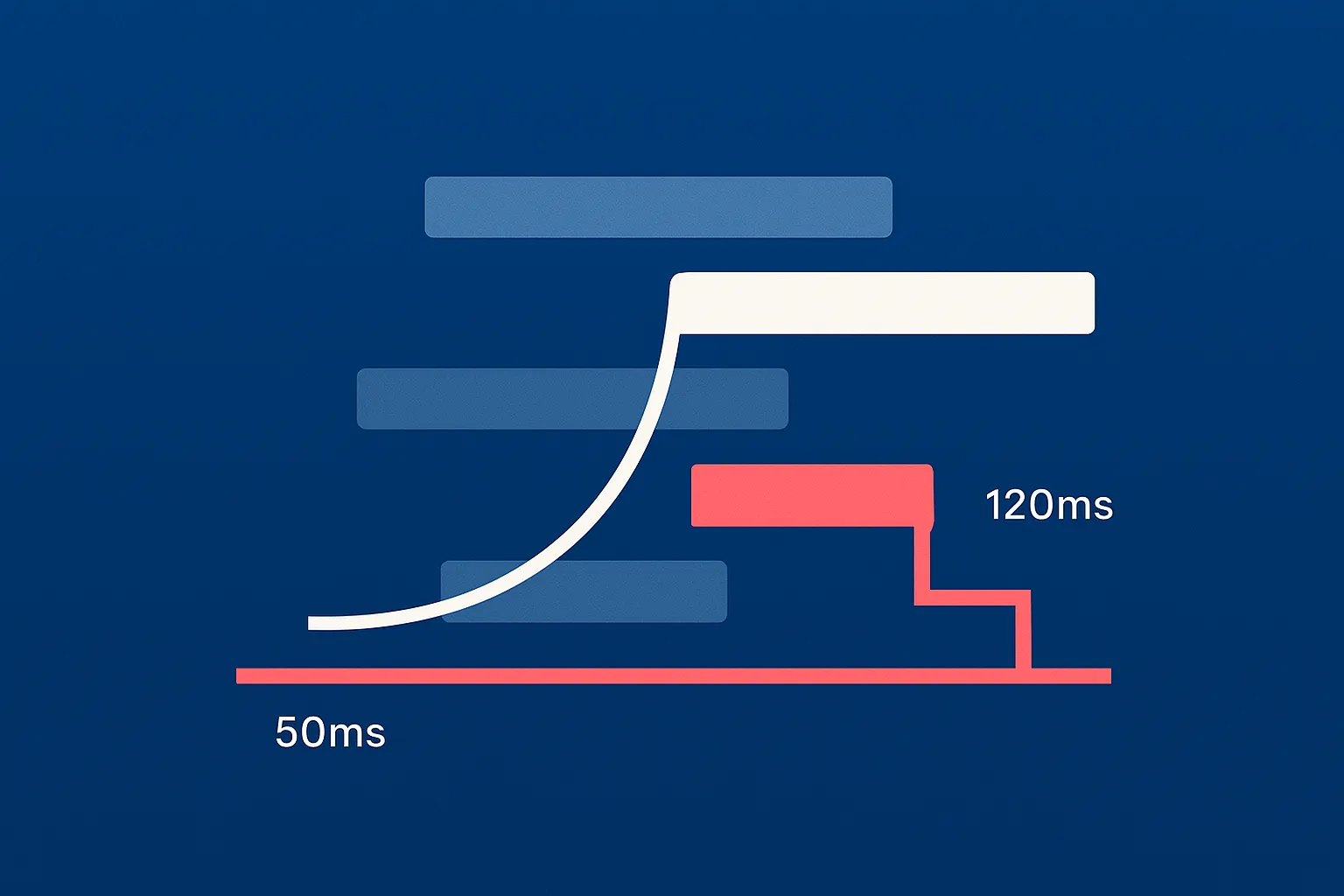

Case Study: Debugging a Silent Motion Regression in Production

A hero animation:

- multi-phase motion,

- scroll-driven,

- defines the entire first impression.

After a build optimization:

- static screenshots matched,

- average FPS unchanged,

- no obvious bugs.

Yet the designer reported:

“It feels cheaper now.”

Frame-based analysis

- frame 18: 4-px transform jump,

- easing curve lost a micro-slowdown phase,

- animation start delayed by +120 ms,

- overshoot removed entirely.

Root cause

The build pipeline normalized easing curves and dropped micro-timings. From an engineering standpoint — optimization. From a perception standpoint — silent brand degradation. Without animation-specific QA, this would have shipped unnoticed.

Production-Grade Acceptance Thresholds

Without numeric thresholds, animation QA is not engineering — it is opinion. Typical real-world tolerances:

| Metric | Threshold |
|---|---|
| Transform drift | ≤ 1.5 px |
| Opacity delta | ≤ 0.02 |
| Easing time drift | ≤ 12 ms |
| Input → first motion | ≤ 120 ms |
| Dropped frames (cluster) | ≤ 2 consecutive |

Exceeding any of these results in an automatic failure.
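The thresholds table translates directly into a gate. In this sketch, the metric keys are hypothetical names for the table rows. The measured values would come from the frame and timing analysis.

```javascript
// Thresholds from the acceptance table, keyed by hypothetical metric names.
const THRESHOLDS = {
  transformDriftPx: 1.5,
  opacityDelta: 0.02,
  easingTimeDriftMs: 12,
  inputToFirstMotionMs: 120,
  droppedFrameCluster: 2,
};

// Returns every metric that exceeds its threshold (or was not measured);
// an empty list means the run passes.
function evaluateRun(measured, thresholds = THRESHOLDS) {
  const failures = [];
  for (const [metric, limit] of Object.entries(thresholds)) {
    if (!(metric in measured)) {
      failures.push({ metric, reason: "not measured" });
    } else if (measured[metric] > limit) {
      failures.push({ metric, value: measured[metric], limit });
    }
  }
  return failures;
}

const run = {
  transformDriftPx: 4,
  opacityDelta: 0.01,
  easingTimeDriftMs: 9,
  inputToFirstMotionMs: 240,
  droppedFrameCluster: 1,
};
console.log(evaluateRun(run).map((f) => f.metric));
// [ 'transformDriftPx', 'inputToFirstMotionMs' ]
```

This sketch deliberately treats an unmeasured metric as a failure, so a silently skipped measurement cannot pass as green. In a CI job, a non-empty result would typically become a non-zero exit code.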

QA Checklist for Motion Fidelity

Motion geometry

- start and end positions,

- path stability,

- absence of micro-jumps.

Timing

- delays,

- durations,

- phase orchestration,

- multi-element synchronization.

Easing

- curve integrity,

- deceleration correctness,

- overshoot preservation.

Performance

- response delay,

- frame stability,

- CPU-pressure behavior.

Cross-browser identity

- Safari vs. Chrome compositing,

- Firefox vs. WebKit rendering,

- mobile vs. desktop GPU behavior.

Interaction layer

- scroll coupling,

- hover interrupts,

- retrigger reliability,

- cancellation stability.

Degraded conditions

- CPU throttling,

- network latency,

- main-thread contention.

Why Traditional Tests Fail at Motion

- E2E tests validate only final state.

- Visual regression captures only one moment.

- Unit tests verify logic, not perception.

Animation QA therefore requires a hybrid system:

frames + timing + executable motion contracts + controlled human validation

Remove any layer — and motion immediately becomes opaque.

CI Reality: Where This Actually Runs

In most real pipelines, frame-based diffing does not run on every PR.

Operationally, it runs:

- on PRs carrying visual-critical labels,

- on motion-heavy scenes,

- in nightly or pre-release pipelines.

This keeps pipelines:

- fast,

- deterministic,

- operationally sustainable.
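The selection policy itself can be made explicit in code. This is a hypothetical sketch. The label names, trigger values, and path patterns are placeholders for whatever a given repository uses.

```javascript
// Hypothetical CI policy deciding whether the frame-diff suite runs.
const MOTION_LABELS = new Set(["visual-critical", "motion"]);
const MOTION_PATHS = [/^src\/animations\//, /\.motion\.json$/];

function shouldRunFrameDiff({ trigger, labels = [], changedFiles = [] }) {
  // Nightly and pre-release pipelines always run the full suite.
  if (trigger === "nightly" || trigger === "pre-release") return true;
  // PRs run it only when labeled or when motion-heavy scenes changed.
  if (labels.some((l) => MOTION_LABELS.has(l))) return true;
  return changedFiles.some((f) => MOTION_PATHS.some((re) => re.test(f)));
}

console.log(shouldRunFrameDiff({ trigger: "pr", labels: ["docs"], changedFiles: ["README.md"] })); // false
console.log(shouldRunFrameDiff({ trigger: "pr", changedFiles: ["src/animations/hero.ts"] })); // true
console.log(shouldRunFrameDiff({ trigger: "nightly" })); // true
```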

Product Impact: Why This Directly Affects Business Metrics

Users do not consciously analyze easing curves. They subconsciously judge quality, trust, and credibility through motion.

Typical A/B impact observed in production systems:

| Metric | Before | After |
|---|---|---|
| Hero interaction start | 310 ms | 140 ms |
| Scroll → motion delay | 180 ms | 70 ms |
| Bounce rate | 48.2% | 44.9% |
| Time to first meaningful interaction | +0.6 s | — |

Animation QA is not “visual polish.”

It directly affects:

- perceived craftsmanship,

- emotional trust,

- conversion stability,

- retention quality.

Conclusion

In strict production terms, animation QA means:

- deterministic triggering,

- frame-by-frame regression,

- executable motion contracts,

- numeric easing validation,

- perceptual performance profiling,

- degraded-condition testing,

- cross-browser identity,

- CI-level enforcement.

An animation either behaves exactly as designed — or it is a defect. There is no middle state. True animation quality is not visual beauty. It is behavioral precision under control.

Dec 26, 2025