Introduction

Test automation was supposed to free teams from repetitive tasks. In practice, many QA teams spend more time maintaining tests than creating them.

- Minor UI changes break dozens of tests.

- Writing test cases lags behind fast-moving feature releases.

- Pipelines stall because automation collapses over trivial issues.

Instead of accelerating delivery, automation has become a drag on it. Traditional approaches have reached their limit. They simply don’t scale with today’s release pace.

The next step is Agentic AI: not just ML-powered selectors or bug classifiers, but autonomous agents that understand goals, build plans, and adapt in real time. This isn’t “another tool.” It’s the future of QA.

What Makes Agentic AI Different?

Conventional AI in testing: input → prediction.

Agentic AI: goal → plan → actions → correction → learning.

Instead of brittle scripts, agents:

- analyze context and find alternatives,

- learn from past outcomes,

- execute business flows the way real users do.

This represents a fundamental shift, from testing individual buttons to validating business goals, such as completing a purchase, registering an account, or generating a report.
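To make the goal → plan → actions → correction → learning loop concrete, here is a minimal sketch. It is an illustration, not a reference to any real agent framework: `FlowAgent`, `planner`, `strategies`, and `memory` are all hypothetical names, and each "strategy" is just a function that reports whether a step succeeded.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Strategy = Callable[[], bool]  # returns True when the step succeeded


@dataclass
class FlowAgent:
    """Hypothetical agent: all names here are illustrative assumptions."""
    planner: Callable[[str], List[str]]      # goal -> ordered step names
    strategies: Dict[str, List[Strategy]]    # step -> alternative ways to do it
    memory: Dict[str, int] = field(default_factory=dict)  # step -> index that last worked

    def pursue(self, goal: str) -> bool:
        for step in self.planner(goal):                  # plan
            options = self.strategies[step]
            first = self.memory.get(step, 0)             # learning: reuse what worked before
            order = [first] + [i for i in range(len(options)) if i != first]
            for i in order:
                if options[i]():                         # action
                    self.memory[step] = i                # remember the successful alternative
                    break
                # correction: this option failed, so try the next alternative
            else:
                return False                             # every alternative failed
        return True
```

On a second run the agent tries the remembered alternative first, which is the "learning" part of the loop in its simplest possible form.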

Key Use Cases

1. Test generation from the interface

Traditional approach: QA engineers manually script test flows, resulting in patchy coverage.

Agent approach: an agent explores the UI, maps elements, and generates end-to-end scenarios.

Mini-case: In an e-commerce project, an agent generated flows covering search, filtering, cart, and payment.

The result: 40% less time spent on test design, with broader coverage including edge cases that manual QA wouldn’t have captured in time.
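One way to picture the "explore, map, generate" step: once the agent has mapped screens and the actions that connect them, end-to-end scenarios are paths through that map. The sketch below assumes the exploration has already produced such a map; `ui_map` and `generate_flows` are hypothetical names, and the screens are invented for the example.

```python
from typing import Dict, List


def generate_flows(ui_map: Dict[str, Dict[str, str]], start: str, goal: str,
                   max_steps: int = 6) -> List[List[str]]:
    """Enumerate action sequences leading from `start` to `goal` (depth-first)."""
    flows: List[List[str]] = []

    def walk(screen: str, path: List[str], visited: frozenset) -> None:
        if screen == goal:
            flows.append(path)
            return
        if len(path) >= max_steps:       # keep generated scenarios bounded
            return
        for action, target in ui_map.get(screen, {}).items():
            if target not in visited:    # avoid looping between screens
                walk(target, path + [action], visited | {target})

    walk(start, [], frozenset({start}))
    return flows


# Assumed map of an e-commerce UI: screen -> {action: next screen}
ui_map = {
    "home": {"search": "results"},
    "results": {"filter": "filtered", "add_to_cart": "cart"},
    "filtered": {"add_to_cart": "cart"},
    "cart": {"pay": "payment"},
}
flows = generate_flows(ui_map, "home", "payment")
```

Each returned list is a candidate scenario, for example search, filter, add to cart, pay. A real agent would also need to choose input data and assertions for each step, which this sketch leaves out.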

2. Self-healing tests

UI changes constantly. In traditional automation, renaming a button can break dozens of tests.

Agent approach: if a locator fails, the agent checks text, position, and context to continue execution.

Example: changing submit-btn to submit-button. A regular script fails. The agent recognizes the element by meaning and proceeds.
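A simplified sketch of that fallback, under stated assumptions: elements are plain dictionaries with `id`, `text`, and `role`, and "recognizing by meaning" is approximated with string similarity from Python's standard `difflib`. The weights and the threshold are arbitrary illustrative values, not tuned numbers from any tool.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Optional

Element = Dict[str, str]


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity in the range 0..1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_element(dom: List[Element], expected: Element,
                 threshold: float = 0.6) -> Optional[Element]:
    # 1. Normal path: the locator still matches exactly.
    for el in dom:
        if el["id"] == expected["id"]:
            return el

    # 2. Healing path: score every element on id, visible text, and role.
    def score(el: Element) -> float:
        same_role = 1.0 if el["role"] == expected["role"] else 0.0
        return (0.4 * similarity(el["id"], expected["id"])
                + 0.4 * similarity(el["text"], expected["text"])
                + 0.2 * same_role)

    best = max(dom, key=score, default=None)
    if best is not None and score(best) >= threshold:
        return best
    return None  # nothing plausible: fail the test instead of guessing


dom = [
    {"id": "submit-button", "text": "Submit", "role": "button"},
    {"id": "cancel-btn", "text": "Cancel", "role": "button"},
]
healed = find_element(dom, {"id": "submit-btn", "text": "Submit", "role": "button"})
```

The threshold matters: a healing step that accepts any loosely similar element hides real regressions, so returning `None` when nothing scores well is part of the design.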

Business impact: fewer false failures, reduced maintenance overhead, more stable CI/CD.

3. Continuous monitoring in production

Monitoring today usually means uptime and infrastructure metrics. But users care about flows, not servers.

Agents can continuously perform key business journeys: sign-up, login, checkout, report generation.

Real effect: In a SaaS platform, an agent detected performance degradation in report generation right after a release. Infrastructure metrics were green, but the business function was broken. The issue was resolved within an hour, before complaints piled up.
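A journey check of this kind can be sketched as a function that runs the flow and compares its duration to a baseline. Everything here is an assumption for illustration: `check_journey`, the `slow_factor` of 2.0, and the idea that the journey is a callable that raises on functional failure.

```python
import time
from typing import Any, Callable, Dict


def check_journey(journey: Callable[[], None], baseline_seconds: float,
                  slow_factor: float = 2.0,
                  clock: Callable[[], float] = time.perf_counter) -> Dict[str, Any]:
    """Run one business journey and classify the result."""
    start = clock()
    try:
        journey()                      # e.g. log in and generate a report
    except Exception as exc:           # the flow itself is broken
        return {"status": "failed", "detail": repr(exc)}
    elapsed = clock() - start
    if elapsed > slow_factor * baseline_seconds:
        # The flow works but is much slower than usual.
        return {"status": "degraded", "elapsed": elapsed}
    return {"status": "ok", "elapsed": elapsed}
```

Keeping "degraded" separate from "failed" lets a slow report generation raise an alert without being reported as a functional defect.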

Risks: Beyond the Marketing

1. Security

An agent with system access is a potential liability. In one pilot, an agent accessed a test admin panel and deleted all orders, including valuable test data. Recovery required restoring from backups.

Lesson: sandboxing and the principle of least privilege are non-negotiable. Agents need strict access boundaries.
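In code, least privilege can start as an explicit allow-list that every agent action must pass through. The sketch below is a hypothetical guard, not the API of any real tool: `SandboxedExecutor`, the action names, and the host name are invented for the example.

```python
from typing import Any, Callable, Iterable


class ActionNotAllowed(PermissionError):
    """Raised when the agent attempts something outside its boundaries."""


class SandboxedExecutor:
    def __init__(self, allowed_actions: Iterable[str], allowed_hosts: Iterable[str]):
        self.allowed_actions = frozenset(allowed_actions)
        self.allowed_hosts = frozenset(allowed_hosts)

    def execute(self, action: str, host: str, handler: Callable[[], Any]) -> Any:
        # Deny by default: anything not explicitly listed is rejected.
        if host not in self.allowed_hosts:
            raise ActionNotAllowed(f"host not allowed: {host}")
        if action not in self.allowed_actions:
            raise ActionNotAllowed(f"action not allowed: {action}")
        return handler()


executor = SandboxedExecutor(
    allowed_actions={"read_orders", "create_order"},   # no delete permission
    allowed_hosts={"shop.test.example"},               # assumed test environment
)
```

With this in place, a call such as `executor.execute("delete_orders", "shop.test.example", ...)` raises `ActionNotAllowed` before the handler runs. An application-level guard like this complements, rather than replaces, restricted credentials on the system under test.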

2. False positives

Agents can overreact. In one case, an agent flagged a checkout flow as “broken” because the page loaded more slowly than usual. QA wasted half a day proving there was no defect.

Lesson: don’t unleash agents without human oversight. Start with a human-in-the-loop model.
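One simple guard against overreaction is to re-run a failing check before reporting it, and to send only reproducible failures to a person. The sketch below assumes a `check` callable that returns `True` on success; the function name, the three attempts, and the status labels are illustrative choices.

```python
from typing import Callable


def triage(check: Callable[[], bool], attempts: int = 3, min_failures: int = 2) -> str:
    """Re-run a check and decide whether a human needs to look at it."""
    failures = sum(1 for _ in range(attempts) if not check())
    if failures == 0:
        return "pass"
    if failures < min_failures:
        return "flaky"                 # log it, but do not interrupt anyone
    return "needs_human_review"        # reproducible: an engineer confirms or rejects
```

The agent never files a defect on its own here. The strongest verdict it can reach is a request for review, which keeps the engineer in the loop.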

3. Transparency

If an agent makes decisions for opaque reasons, trust evaporates. QA needs clear logs: what action was taken, why, and what outcome followed. Without observability, agents are just black boxes.
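A minimal version of such a log is one structured record per decision, capturing the action, the reason, and the outcome. The field names and the `log_decision` helper below are assumptions for illustration; the sink is a plain list standing in for a file or log pipeline.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List


def log_decision(action: str, reason: str, outcome: str, sink: List[str]) -> Dict[str, str]:
    """Append one JSON line describing what the agent did and why."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "action": action,      # what was done
        "reason": reason,      # why the agent chose it
        "outcome": outcome,    # what happened as a result
    }
    sink.append(json.dumps(record))
    return record


audit_log: List[str] = []
log_decision(
    action="click element with text 'Submit'",
    reason="locator 'submit-btn' not found; closest match by text and role",
    outcome="form submitted",
    sink=audit_log,
)
```

One JSON object per line is easy to search and to feed into dashboards, so a reviewer can reconstruct why a test passed after a locator was healed.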

Agentic AI Tools in QA

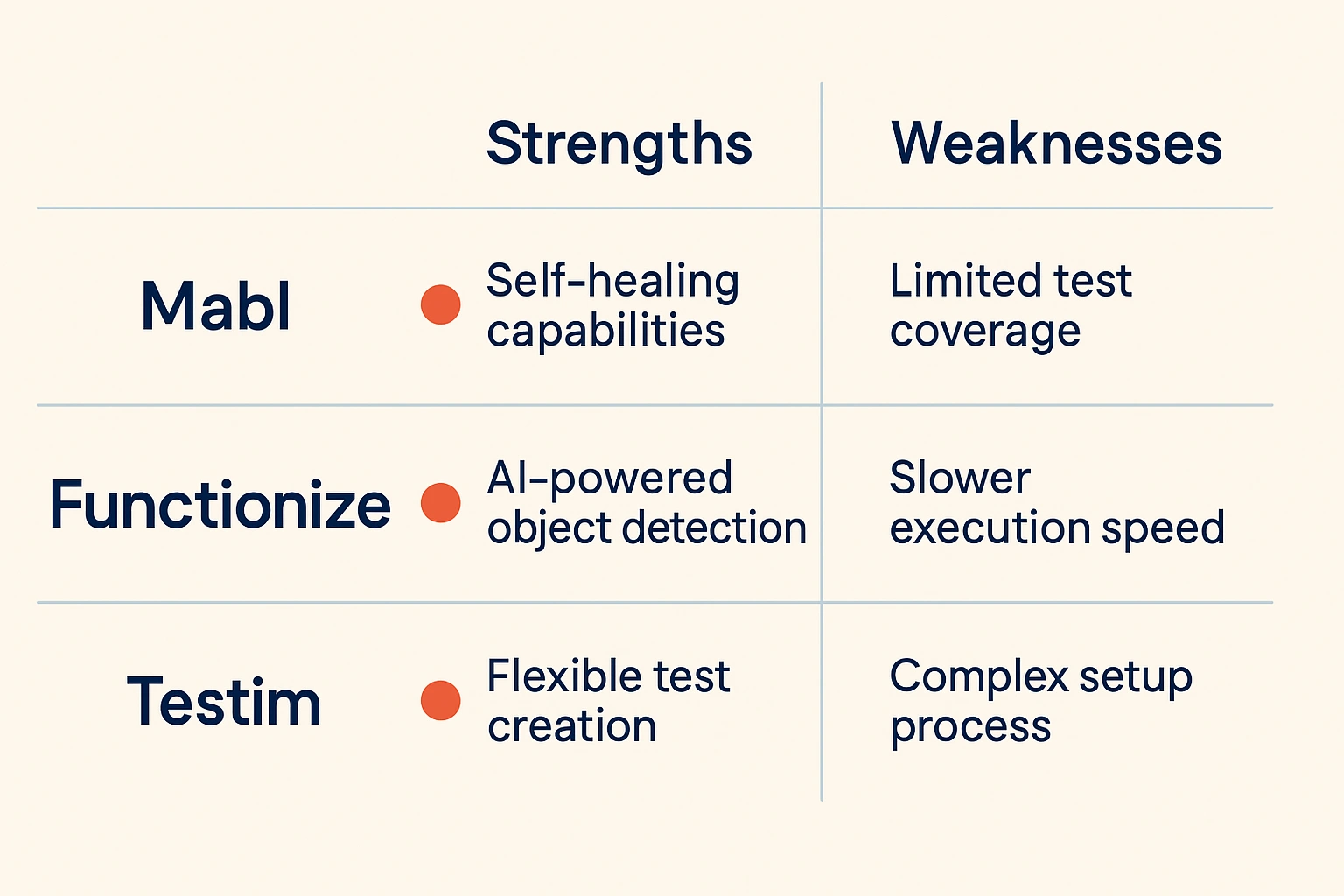

- Mabl: good at generating scenarios, but struggles with highly customized UIs.

- Functionize: strong ML for locators, but limited customization and tied to its cloud.

- Testim: focuses on self-healing selectors, but still requires heavy manual configuration.

These are useful, but they’re AI assistants, not true autonomous agents.

On the open-source side:

- Playwright’s auto-waiting and user-facing locators (role, text, label) make tests more resilient, but they are still selector-centric and do not heal themselves.

- Experiments with GPT-4 and Claude show promise in generating tests from natural language, but remain prototypes.

The market is in its early stages. The trajectory is clear: from smarter selectors to fully agentic systems.

Why This Matters for Business

Agentic AI is not a shiny QA toy — it’s a business lever:

- Faster releases. Pipelines don’t collapse over trivial UI changes.

- Lower costs. Less time wasted on test maintenance.

- Better UX quality. Real business flows are tested, not only isolated buttons.

Case: in a fintech company, an agent caught a calculation error in loan repayment logic during production monitoring. Infra metrics looked fine. The potential financial impact was in the tens of thousands of dollars. The bug was fixed before users noticed.

Practical Steps to Get Started

- Start small. Choose one flow, like “search → order.”

- Restrict access. Run agents in sandboxes with test accounts and detailed logging.

- Add human feedback. Engineers must confirm or reject agent findings early on.

- Scale gradually. Move from UI flows to business-critical processes.

- Invest in observability. Dashboards and logs build trust.

QA as an Orchestrator of Agents

Within 2–3 years, QA engineers won’t be scripting endless flows. They’ll be orchestrating agents: one for functionality, one for performance, one for security.

QA shifts from execution to strategy. They set priorities, interpret results, and guide agents. Teams that embrace this will move faster. Teams that don’t will be stuck fixing broken locators while competitors ship features.

Conclusion

Agentic AI is the next stage in QA evolution. Traditional automation has peaked.

- Agents cut test maintenance by recovering from UI changes that break scripted tests.

- They validate business journeys, not just UI fragments.

- They prevent costly incidents and accelerate delivery.

Yes, there are risks: security, false positives, lack of transparency. Ignoring them is dangerous. But one truth is clear: QA engineers who adopt agentic approaches today will lead tomorrow. The rest will still be fixing locators.

Dec 9, 2025