Containerization, and Docker in particular, has been all the rage lately among developers and web development specialists. Some compare it to virtualization, while others consider it the start of a new era of cloud computing. Yet for all the talk, I've noticed that few people outside development give it a second thought. They tend to care about containers only when it means moving to the public cloud or monitoring container-based infrastructure (and who can blame them?). That's exactly why I wrote this article.

We’re in the midst of technological shifts that are disrupting everything we know about development, testing, and deployment. There is a new way to work — a new way to think about infrastructure, application deployment, and DevOps. As these shifts reshape engineering culture, some organizations choose to work alongside a seasoned custom web app development firm to align their infrastructure and deployment models with modern container-first standards.

Containerized apps allow for rapid deployment and consistent environments across different platforms. Gone are the days when developers deployed code from their laptops (and prayed it worked when it hit production).

Containerization is one of the most promising approaches to modernizing software development. It’s already transforming some of the biggest applications in the world and making an impact in a number of trends you’ve likely heard people talk about, such as microservices, cloud computing, and serverless computing.

Introduction to Containerization in Web Development

Containerization is the practice of packaging an application into a standardized unit for software development, deployment, and management. When internal capacity is limited, teams sometimes work with web app development agencies to accelerate adoption and align implementation with existing delivery processes. In recent years, enterprises have embraced containerization as one of the most popular DevOps techniques.

Containers are small, portable, self-sufficient software packages that run on top of an existing OS. They can be used as an alternative to virtual machines for running applications in isolation from other applications on the same physical server. Containers are created from container images, which act as templates and can be shared between workstations.

Containers Vs Virtual Machines

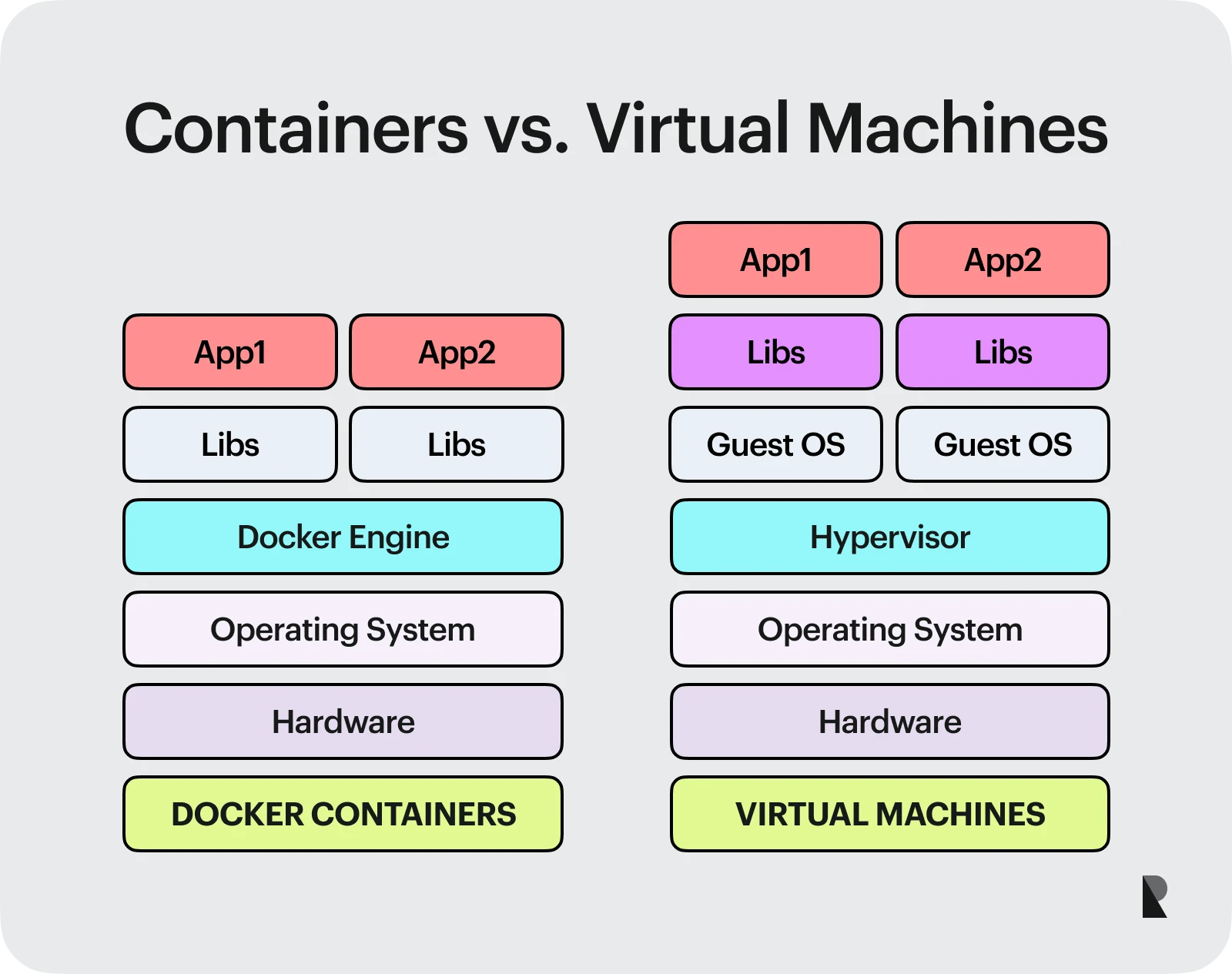

Containers have some similarities with virtual machines (VMs), but there are also some important differences:

- A virtual machine (VM) runs a complete guest operating system, with its own kernel and drivers, on top of a hypervisor that virtualizes the underlying hardware. Each VM has its own allocation of memory and virtual devices. Containers, by contrast, rely on user-space isolation: they share the host's kernel and use only a portion of the host OS's resources. Because each application instance carries less overhead and is more portable (you can run a container anywhere that supports Docker), containers are lighter than VMs.

- Like virtual machines, containers let you build and run applications in isolation, but without making a complete copy of the operating system for each one. Early versions of Docker wrapped your program and its dependencies using LXC (Linux Containers); current versions use Docker's own runtime library, libcontainer, which is now part of runc.

- As a result, you can run numerous containers on the same host without worrying about conflicting application dependencies or port clashes. For instance, if your application needs both Apache and MySQL, you could create two containers, one for each, and connect them so that they work together.

- Docker is designed to be both lightweight and portable. It runs on any modern Linux distribution and provides a command-line tool for interacting with it. The Docker engine doesn't require any additional software beyond the kernel features it uses (such as namespaces and cgroups).
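
The shared-kernel point in the first item above can be checked directly: a process inside a container reports the host's kernel release, whereas a VM guest reports its own. The following is a minimal Python sketch (the function name kernel_info is illustrative, not part of any Docker tooling) that could be run once on a Linux host and once inside a container on that host. On native Linux, both runs should print the same release. On macOS or Windows, Docker Desktop runs containers inside a Linux VM, so the comparison does not hold there.

```python
import platform


def kernel_info() -> dict:
    """Return the OS name and kernel release seen by this process."""
    return {"system": platform.system(), "kernel_release": platform.release()}


if __name__ == "__main__":
    info = kernel_info()
    # Run on the host and inside a container, then compare the two lines
    print(f"{info['system']} kernel {info['kernel_release']}")
```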

Benefits of Containerization

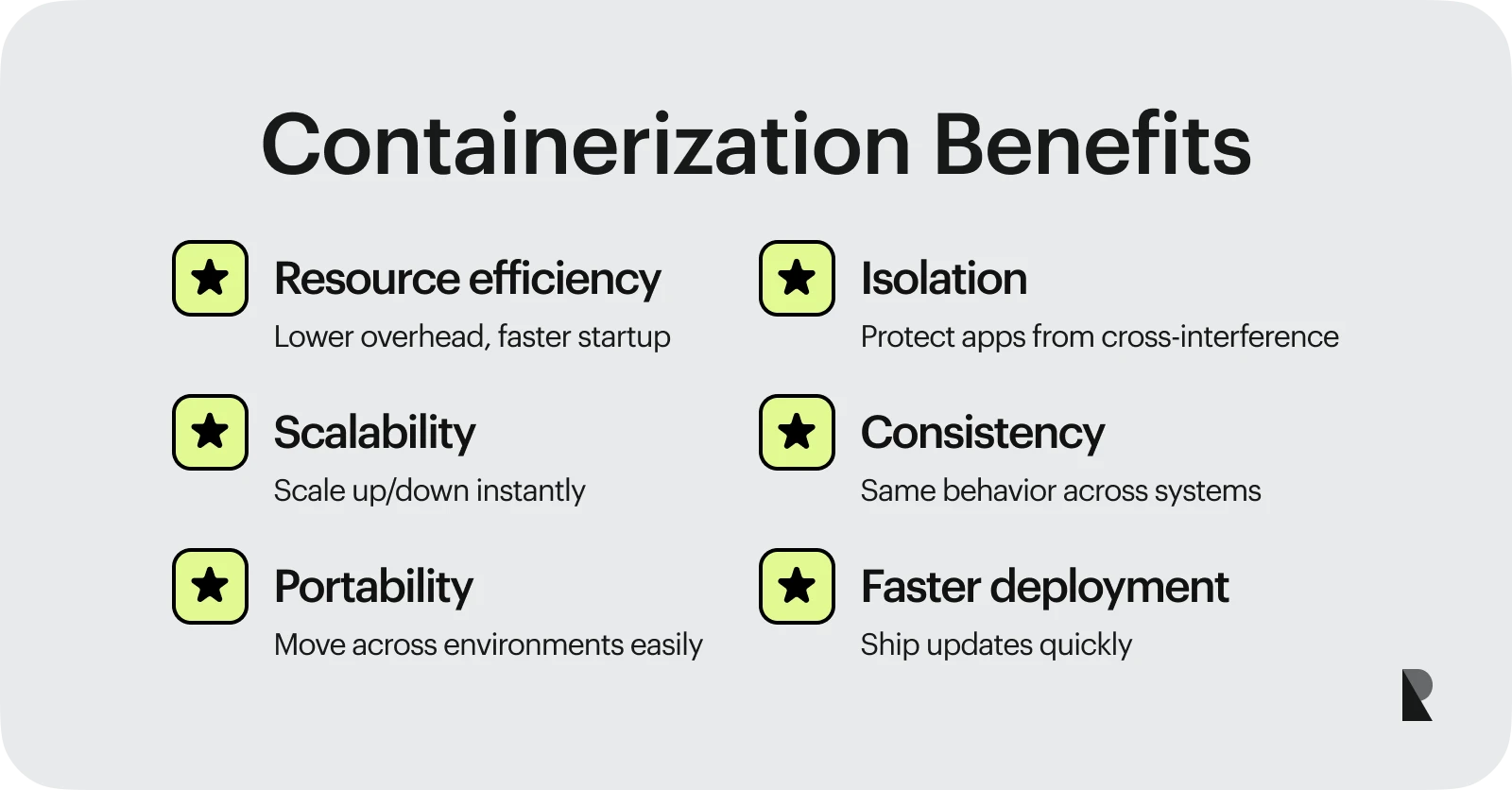

Improved resource efficiency

Containers are much more efficient than the traditional approach of running each application in its own virtual machine. Because they share the kernel with the host operating system, containers can be started and stopped quickly without having to boot an entire guest operating system. The lower overhead also lets you run more workloads on each server, which matters for applications that run continuously, such as web servers or databases that handle traffic 24 hours a day.

Improved scalability

Containers improve the scalability of your applications by allowing you to easily scale up or down based on demand. This is because containers are lightweight and run in isolation, which means they don’t have any impact on other isolated processes running on the same host machine. They also allow you to quickly spin up new instances when necessary—for example, using Docker Compose or Kubernetes for orchestration.

Containers allow you to run multiple applications on a single server, each with its own private environment. This allows you to scale your application as your needs grow by adding additional servers and containers.

Improved portability

Containers can be moved from one host to another with little or no change to their configuration, making them much easier to relocate than virtual machines. This lets you move your applications between cloud providers and physical servers with minimal reconfiguration. For example, you could run your container on a server in AWS and later move it to Google Cloud.

Isolation

Containers provide an isolated environment for each application, which allows you to run multiple applications on a single server and prevents them from interfering with each other. This is especially useful if one container has a security vulnerability or becomes compromised, because the isolation helps keep the problem from spreading to other containers and the host server.

Consistency advantages

Containers provide a consistent runtime environment for applications, which allows you to deploy applications across multiple servers or platforms with the same configuration and dependencies. This consistency helps ensure that your applications behave the same way regardless of where they run.

Faster deployment

Containers are faster to deploy than virtual machines because there is no guest operating system to install or configure. You can use a container image that has all the software and dependencies you need, run it on any system capable of running Docker, and start using it immediately. This makes containers ideal for rolling out new versions of your applications quickly.

Understanding Containers

Containers are a way of packaging an application with all its dependencies into a self-sufficient unit that can run on any Linux system with a container runtime. They are similar to virtual machines, except that they start faster and use fewer resources. A container image is a collection of files that defines your application, its configuration, and any system libraries or frameworks it depends on. When you run a container from an image, the runtime uses the image's read-only contents as the container's filesystem, adds a thin writable layer on top, and starts your application as an isolated process on the host's kernel, so nothing needs to be installed on the host beforehand.

The need for containers

The need for containers is driven by two factors. First, applications are getting more complex as they embrace microservices and service-oriented architectures. Each application in such an environment consists of multiple components that need to be deployed separately for each environment (development, test, staging, and production) and managed independently of one another. Second, teams need a way to package an application with all its dependencies into a self-sufficient unit that can run on any Linux system without installing anything extra on the host machine, and containers provide exactly that.

Containers are not the same as virtual machines (VMs). They don't provide the same degree of isolation as VMs; rather, they share the host operating system's kernel with other containers. But they offer a level of abstraction that simplifies deployment and resource management.

Applications running inside containers also benefit from improved security because they can be isolated from the host operating system. This means less risk of malicious attacks compromising your data or causing downtime. It also means that if you want to update an application's code, you don't have to worry about breaking anything else on your server or in your network.

Benefits of containers

- Lightweight: Containers are lightweight, which means that they're easy to move around and they don't require a lot of resources. That matters most on platforms that start and stop workloads on demand, such as serverless computing.

- Scalable: Containers are also highly scalable because they can be deployed across multiple nodes or machines at once. This lets you scale more quickly than you could with heavier infrastructure such as virtual machines.

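- Secure: Containers improve security because each one runs in its own isolated instance, which makes it harder for an attacker who compromises one container to reach other containers or the server itself. The isolation is not absolute, though, since all containers on a host share its kernel.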

- CI/CD-friendly: Containers fit well into a Continuous Integration/Continuous Deployment (CI/CD) pipeline because they're easy to test and verify. Container images and runtimes follow open standards from the Open Container Initiative (OCI), so tools such as Docker and Kubernetes are interoperable with other container tools and with cloud services like AWS ECS.

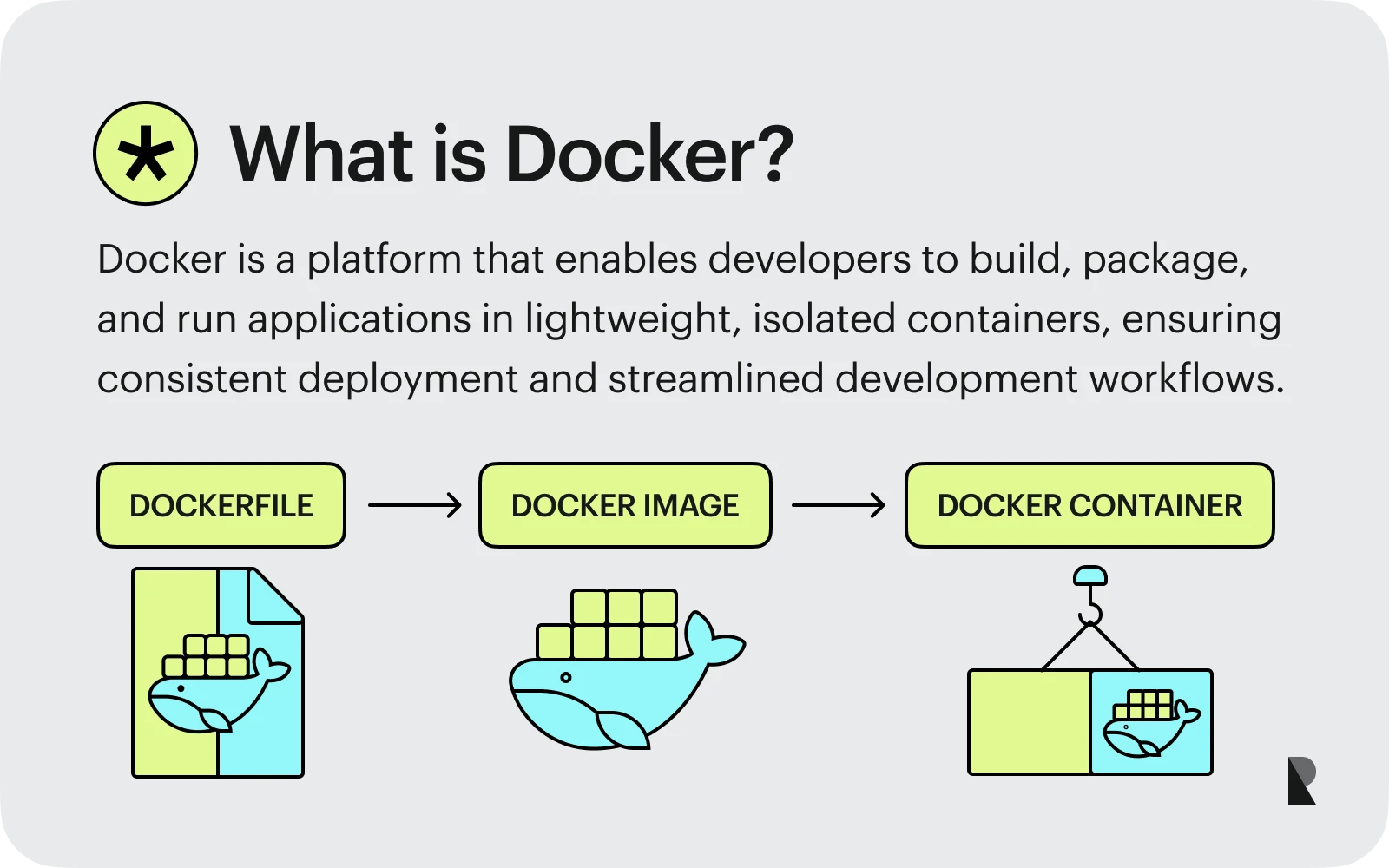

Introduction to Docker

The three main components of Docker:

1. Dockerfile

A Dockerfile is a text file that contains all the instructions needed to build an image, along with metadata such as exposed ports, environment variables, and the default command to run. The Docker daemon reads this file and builds the image by executing the instructions in order.

Each Dockerfile starts from a base image, and the image it produces can then be used to start one or more containers.

2. Docker Image

A Docker image is a read-only template that includes all the layers and metadata needed to run an application. It is the blueprint for a container, not the container itself. When you start a container from an image, your program is ready to use because the image already contains everything it needs to run.

A Docker image is built from a Dockerfile. As the Docker daemon works through the file, instructions such as RUN and COPY each add a new layer on top of the previous ones.
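
To make the idea of read-only layers more concrete, here is a toy model in Python. It is only an analogy, not how Docker stores images: each layer is a plain dictionary mapping hypothetical file paths to stand-in contents, and collections.ChainMap plays the role of the layered filesystem. The point it illustrates is that several containers can share the same image layers while each writes only to its own top layer.

```python
from collections import ChainMap

# Read-only image layers, oldest first (paths and contents are made up)
base_layer = {"/usr/bin/python": "python 3.9 binary"}
deps_layer = {"/app/requirements.txt": "flask"}
code_layer = {"/app/app.py": "print('v1')"}


def start_container(*image_layers: dict) -> ChainMap:
    """Stack an empty writable layer on top of the shared image layers."""
    writable = {}
    # ChainMap searches left to right, so newer layers shadow older ones
    return ChainMap(writable, *reversed(image_layers))


container_a = start_container(base_layer, deps_layer, code_layer)
container_b = start_container(base_layer, deps_layer, code_layer)

# Writes land in the container's own top layer only
container_a["/app/app.py"] = "print('patched')"

print(container_a["/app/app.py"])  # the patched copy, visible only in container_a
print(container_b["/app/app.py"])  # still the file from the image
print(code_layer["/app/app.py"])   # the image layer itself is unchanged
```

In this model, removing a container just discards its writable dictionary, and the image layers remain available for the next container.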

3. Docker Engine

The Docker Engine is the heart of the Docker platform. It creates containers and manages their lifecycle. Although it can be used on its own to create and manage containers, many teams pair it with orchestration tools such as Docker Swarm or Kubernetes.

Docker Engine is built on libcontainer, a lightweight container runtime library that is now part of runc. libcontainer uses Linux namespaces to give each container its own view of process IDs, file system mount points, networking, and other system resources, and uses cgroups to limit the resources a container can consume. As a result, several containers can run side by side on a single Linux kernel.
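
On Linux, the namespaces a process belongs to are visible under /proc/<pid>/ns. The sketch below (the function name current_namespaces is illustrative) lists them for the current process using only the Python standard library. Two processes that show the same identifier for a given type share that namespace, so running this in a host shell and again inside a container would typically print different identifiers.

```python
import os
from pathlib import Path


def current_namespaces(pid: str = "self") -> dict:
    """Map each namespace type (pid, net, mnt, ...) to its kernel identifier.

    Returns an empty dict where /proc/<pid>/ns is unavailable, for example
    on non-Linux systems.
    """
    ns_dir = Path("/proc") / pid / "ns"
    if not ns_dir.is_dir():
        return {}
    namespaces = {}
    for entry in sorted(ns_dir.iterdir()):
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]"
            namespaces[entry.name] = os.readlink(entry)
        except OSError:
            # Skip entries that cannot be read without extra privileges
            continue
    return namespaces


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:20} {ident}")
```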

Containerizing Web Applications

Containerizing a web application can seem daunting at first, but the benefits are worth the effort. The process breaks down into the following steps:

Writing a Dockerfile

Create your Dockerfile, which specifies the steps needed to build an image.

The Dockerfile should be stored in the root of your project directory. Here's an example Dockerfile that builds an image for a Python application listening on port 80:

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.9-slim

# Set the working directory to /app
WORKDIR /app

# Copy the current directory contents into the container at /app
COPY . /app

# Install any needed packages specified in requirements.txt
RUN pip install --trusted-host pypi.python.org -r requirements.txt

# Make port 80 available to the world outside this container
EXPOSE 80

# Define environment variable
ENV NAME=World

# Run app.py when the container launches
CMD ["python", "app.py"]
```

Building a Docker image

Run docker build to create the Docker image. The final argument (.) tells Docker to use the current working directory as the build context, and the -t option names the resulting image. In this case, the command creates an image called clientapp from your web application code:

```shell
docker build -t clientapp .
```

Running the application in a container

Once you've built the image, you can run it with docker run. The following command will start a container based on that image:

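```shell
docker run --name clientapp_c -p 3000:80 -d clientapp
```

Here, --name gives the container a name, -d runs it in the background, and -p 3000:80 publishes the container's port 80 (the port exposed in the Dockerfile) on port 3000 of the host, so the application is reachable at http://localhost:3000.

Managing and deploying the containerized application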

You can now push the image to a registry such as Docker Hub or Amazon ECR and pull it from any machine that needs to run the application. You can also use tools like Docker Compose and Kubernetes to build, deploy, and manage multiple containers as a single application stack.
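
Before an image can be pushed, it needs a name that includes the registry it is going to. As a rough sketch, the Python helpers below compose such references for Docker Hub and for a private Amazon ECR registry. The helper names, the user name exampleuser, the account ID, the region, and the tag are all placeholders chosen for illustration.

```python
def image_reference(registry: str, repository: str, tag: str = "latest") -> str:
    """Compose a fully qualified image reference: registry/repository:tag."""
    return f"{registry}/{repository}:{tag}"


def ecr_registry(account_id: str, region: str) -> str:
    """Host name of a private Amazon ECR registry for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


# Hypothetical values for illustration only
hub_ref = image_reference("docker.io", "exampleuser/clientapp", "1.0.0")
ecr_ref = image_reference(ecr_registry("123456789012", "us-east-1"), "clientapp", "1.0.0")

print(hub_ref)
print(ecr_ref)
```

The resulting string is what you would pass to docker tag (to give the local clientapp image its registry name) and then to docker push, after logging in to the registry.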

Conclusion

Containers are a great way to deploy and manage applications in production and development environments. When combined with tools like Docker Swarm or Kubernetes, they can be used to build highly available, scalable platforms that run on any infrastructure. If you’re looking to improve the performance, security, and manageability of your software applications, Docker containers are a great option. In this guide, we’ve covered how Docker works and how to use it for development and production environments.

To summarize, containerization is a game-changer for web developers, testers, and deployment engineers. Cheaper and faster access to powerful computing environments means less waiting for developers and more flexibility during development. Because containerized applications are self-contained, they are also more robust during deployment. For anyone serious about releasing application code, containers have become an essential part of modern web development.

May 8, 2023