When Control Becomes an Illusion

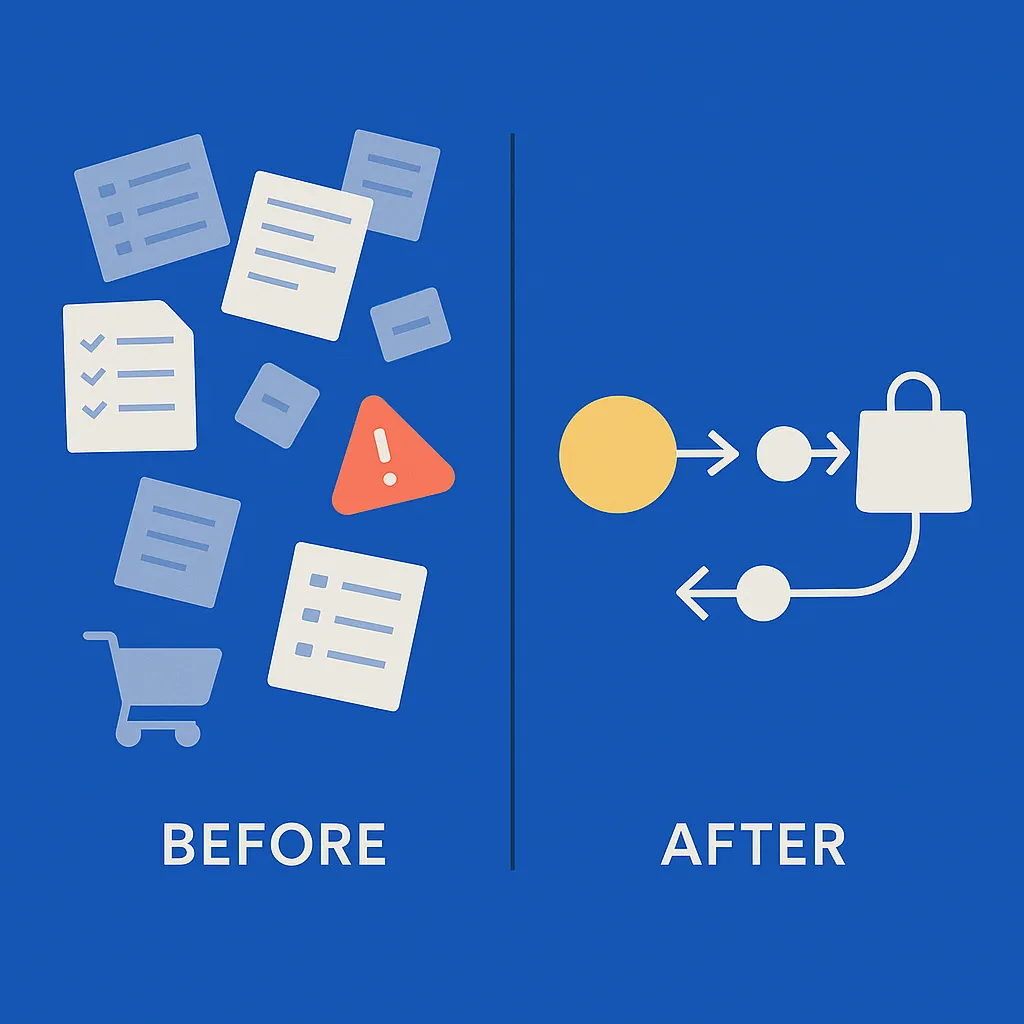

For years, test cases were the backbone of QA — a structured set of steps, expected results, and pass/fail statuses. It worked when products were linear and releases happened monthly.

But the landscape has changed. CI/CD pipelines push updates daily. Interfaces are dynamic, personalized, and driven by design systems. In this world, a test case no longer captures value — it captures a moment in time.

QA teams end up maintaining documentation rather than confidence. The irony: the more test cases you have, the less control you get. When a suite grows to thousands of records, it stops being a safety net. A step like “click the button” becomes obsolete faster than the build reaches staging.

QA as a Mirror of the Business

Scenario-driven QA shifts focus from verification to understanding.

A scenario is not a checklist — it’s a story about a user’s intent and the business goal behind it.

Example:

- A user wants to place an order with an active coupon.

- They should see the final discounted price and successfully complete payment.

No clicks are described, yet the business meaning is clear. QA stops thinking in steps and starts thinking in consequences.

Why Scenarios Outperform Test Cases

1. Change Resilience

In a CI/CD world, step-based test cases decay quickly. Scenarios survive because they’re anchored to goals, not UI details.

2. Lower Cognitive Load

Testers think in terms of “what matters to the user,” not “what’s in step 5.”

3. Deeper Product Involvement

Scenario-driven QA requires understanding business logic and data, not just interface states. QA becomes a co-author of the product experience.

4. Cross-functional Alignment

A single scenario can represent a full user journey — aligning QA, UX, and product around the same goal.

How to Move from Test Cases to Scenarios

Step 1. Audit Existing Coverage

Eliminate duplicated or outdated cases. Keep only unique user journeys that bring measurable value.
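A first pass at the audit can be mechanical: flag cases whose steps are identical once formatting differences are ignored. The sketch below is a minimal illustration. The `LegacyCase` shape, the sample IDs, and the normalization rules are assumptions, not a TestRail export format.

```typescript
// Hypothetical shape of an exported legacy test case (not a real TestRail schema).
interface LegacyCase {
  id: string;
  steps: string[];
}

// Normalize a step so trivial differences (case, spacing, trailing punctuation)
// do not hide duplicates.
function normalizeStep(step: string): string {
  return step.trim().toLowerCase().replace(/\s+/g, " ").replace(/[.!]+$/, "");
}

// Group case IDs by their normalized step sequence and keep only groups
// that contain more than one case.
function findDuplicateCases(cases: LegacyCase[]): string[][] {
  const groups = new Map<string, string[]>();
  for (const c of cases) {
    const key = c.steps.map(normalizeStep).join(" > ");
    const ids = groups.get(key) ?? [];
    ids.push(c.id);
    groups.set(key, ids);
  }
  return Array.from(groups.values()).filter((ids) => ids.length > 1);
}

const duplicateGroups = findDuplicateCases([
  { id: "TC-1", steps: ["Open cart", "Click Checkout."] },
  { id: "TC-2", steps: ["open  cart", "click checkout"] },
  { id: "TC-3", steps: ["Open cart", "Apply coupon"] },
]);
console.log(duplicateGroups);
```

This only catches literal duplicates. Deciding which of the remaining cases still describe a valuable user journey is a judgment call for the team.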

Step 2. Define Business Goals

For each domain area, ask:

“What business outcome does QA protect?”

Example: “Ensure checkout completion for all cart types.”

Step 3. Write Scenarios

Each should describe:

- Context — where the user starts;

- Action — what they do;

- Expectation — what outcome matters;

- Variants — what happens when things deviate.
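The four parts above map naturally onto a small data structure. The following is a sketch under assumptions: the `Scenario` interface, the `missingParts` helper, the ID, and the sample variants are illustrative, and only the coupon example itself comes from the text.

```typescript
// Field names mirror the four parts listed above; the shape itself is an assumption.
interface Scenario {
  id: string;
  context: string;     // where the user starts
  action: string;      // what they do
  expectation: string; // what outcome matters
  variants: string[];  // what happens when things deviate
}

// Return the names of the parts that are empty, so reviewers can spot
// half-written scenarios before they are merged.
function missingParts(s: Scenario): string[] {
  const missing: string[] = [];
  if (!s.context.trim()) missing.push("context");
  if (!s.action.trim()) missing.push("action");
  if (!s.expectation.trim()) missing.push("expectation");
  if (s.variants.length === 0) missing.push("variants");
  return missing;
}

const couponOrder: Scenario = {
  id: "SCN-102",
  context: "Logged-in user with one item and an active coupon in the cart",
  action: "Places the order",
  expectation: "Sees the final discounted price and completes payment",
  variants: ["Coupon expires before payment", "Payment is declined"], // illustrative
};

console.log(missingParts(couponOrder));
```

Note that nothing in the structure mentions buttons or screens. That is deliberate: UI details belong in the automation layer, not in the scenario.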

Step 4. Link with Automation

A scenario defines intent. Automation verifies implementation. Store scenarios in Notion or Git and link them to CI via IDs or metadata.
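One lightweight way to link by ID is to put the scenario ID into each automated test's title as a tag and build a lookup from it. The sketch below assumes a tag convention of the form `@SCN-102`. The function name and the sample titles are hypothetical.

```typescript
// Assumed convention: each automated test title carries one or more
// scenario tags such as "@SCN-102".
const SCENARIO_TAG = /@SCN-\d+/g;

// Build a lookup from scenario ID to the titles of the tests that claim to cover it.
function indexTestsByScenario(testTitles: string[]): Map<string, string[]> {
  const index = new Map<string, string[]>();
  for (const title of testTitles) {
    const tags = title.match(SCENARIO_TAG) ?? [];
    for (const tag of tags) {
      const id = tag.slice(1); // drop the leading "@"
      const titles = index.get(id) ?? [];
      titles.push(title);
      index.set(id, titles);
    }
  }
  return index;
}

const tagIndex = indexTestsByScenario([
  "@SCN-102 quick purchase applies coupon",
  "@SCN-102 @SCN-110 payment screen shows discounted total",
  "legacy smoke test without a tag",
]);
console.log(tagIndex);
```

Tests that carry no tag never appear in the index, which makes untraceable automation easy to list and review.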

Example: Scenario Definition in YAML

```yaml
id: SCN-102
title: "Quick purchase with active coupon"
context:
  user_state: "logged in"
  cart_state: "1 item, active coupon"
steps:
  - action: "Open product page"
  - action: "Click 'Buy Now'"
  - validation: "Payment screen opens"
  - validation: "Discount of 10% is applied"
expected_result: "Purchase completes successfully with correct total"
linked_tests:
  - id: AUT-209
    framework: "Playwright"
    path: "tests/e2e/purchase_with_coupon.spec.ts"
metrics:
  business_goal: "Checkout conversion rate"
  owner: "QA Lead"
```

Everyone, from QA to product managers, can read and understand this format. It lives in Git, gets reviewed like code, and integrates directly into CI/CD.

Traceability: From Scenario to Metric

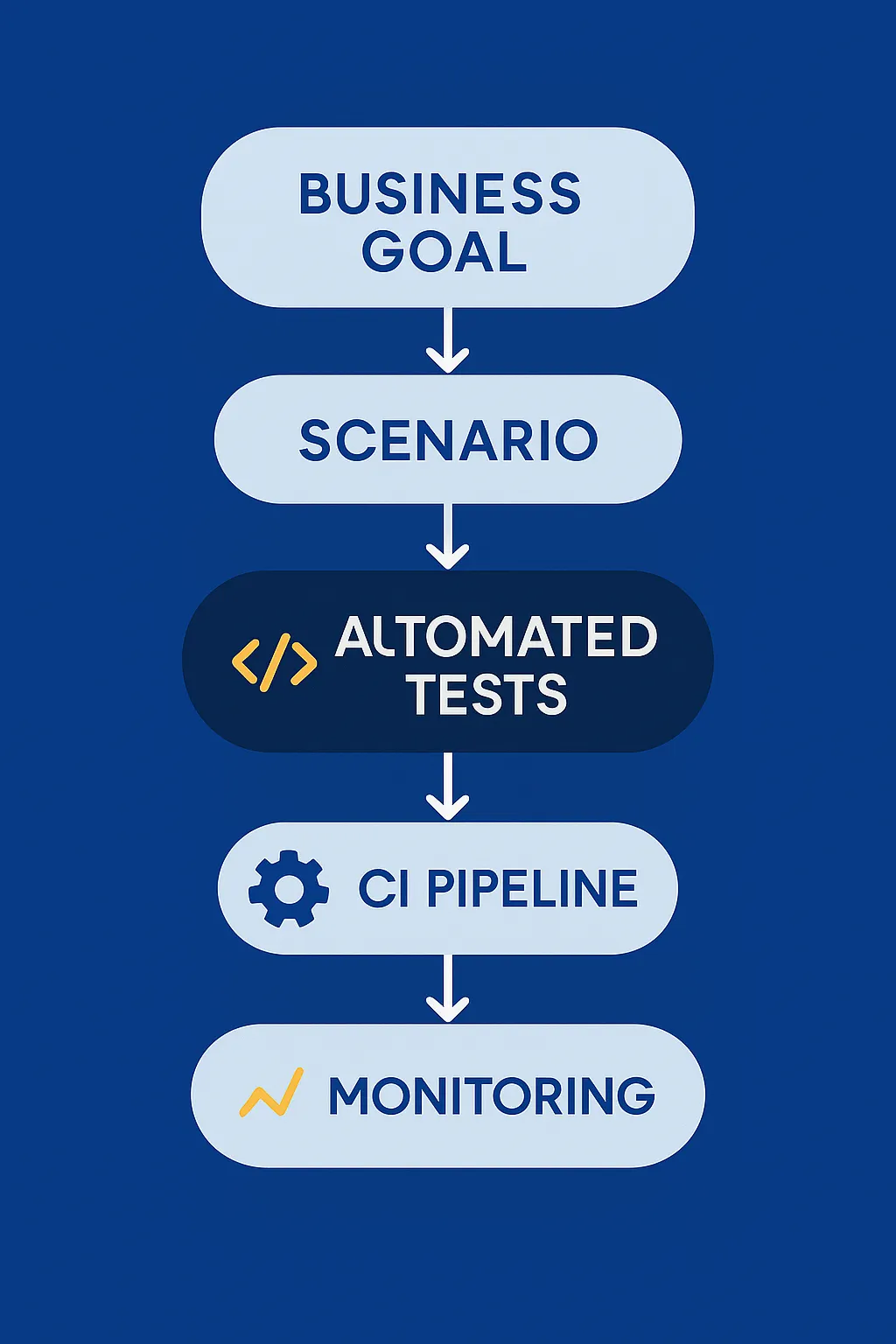

Traceability architecture:

```text
Business Goal
      ↓
Scenario (SCN-XXX)
      ↓
Automated Tests (AUT-XXX)
      ↓
CI Pipeline (build #, report)
      ↓
Monitoring & Metrics (Grafana / Datadog)
```

Each layer is connected through IDs. The CI pipeline automatically pulls related scenarios, executes linked tests, and reports results to Slack or Jira.
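To make the ID chain concrete, here is a minimal sketch of how test results could be rolled up to scenario level. The types, the roll-up rule, and the IDs `SCN-103`, `AUT-210`, and `AUT-211` are assumptions for illustration, not a description of any particular CI tool.

```typescript
type TestStatus = "passed" | "failed" | "skipped";
type ScenarioStatus = "passed" | "failed" | "not covered";

interface ScenarioLink {
  scenarioId: string;   // e.g. "SCN-102"
  businessGoal: string; // e.g. "Checkout conversion rate"
  testIds: string[];    // e.g. ["AUT-209"]
}

// Any failed test fails the scenario. Otherwise a skipped or missing result
// marks it as not covered. It passes only when every linked test passed.
function rollUp(link: ScenarioLink, results: Record<string, TestStatus>): ScenarioStatus {
  if (link.testIds.length === 0) return "not covered";
  let allPassed = true;
  for (const id of link.testIds) {
    const outcome = results[id];
    if (outcome === "failed") return "failed";
    if (outcome !== "passed") allPassed = false;
  }
  return allPassed ? "passed" : "not covered";
}

const exampleLinks: ScenarioLink[] = [
  { scenarioId: "SCN-102", businessGoal: "Checkout conversion rate", testIds: ["AUT-209"] },
  { scenarioId: "SCN-103", businessGoal: "Checkout conversion rate", testIds: ["AUT-210", "AUT-211"] },
];

// AUT-211 has no result in this run, so SCN-103 is reported as not covered.
const ciResults: Record<string, TestStatus> = { "AUT-209": "passed", "AUT-210": "passed" };

for (const link of exampleLinks) {
  console.log(`${link.businessGoal} / ${link.scenarioId}: ${rollUp(link, ciResults)}`);
}
```

Treating a missing result as "not covered" rather than "passed" is a design choice: it keeps a silently skipped test from showing up as green at the business-goal level.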

Example (GitHub Actions configuration):

```yaml
name: Scenario-driven QA
on: [push]
jobs:
  run-tests:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Load scenarios
        run: cat scenarios/*.yaml
      - name: Run linked Playwright tests
        run: npx playwright test --grep @SCN-102
      - name: Report results
        run: node scripts/report-to-slack.js
```

This ensures transparency: scenarios become the single source of truth for QA, CI, and business teams alike.
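The workflow above hard-codes a single scenario tag. If scenarios are meant to drive the run, the `--grep` value could be generated from a list of scenario IDs instead. The sketch below assumes test titles carry tags such as `@SCN-102`. The helper names and the ID `SCN-110` are hypothetical.

```typescript
// Escape characters that have a special meaning in a regular expression.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Build a regular-expression source that matches any of the given scenario tags.
// The trailing \b keeps "@SCN-10" from also matching "@SCN-102".
function buildGrepPattern(scenarioIds: string[]): string {
  return scenarioIds.map((id) => "@" + escapeRegExp(id) + "\\b").join("|");
}

const grepPattern = buildGrepPattern(["SCN-102", "SCN-110"]);
console.log(grepPattern);
```

Playwright treats the `--grep` value as a regular expression matched against test titles, so a pattern like this selects every test linked to the listed scenarios. The value should be quoted when passed on the command line, because `|` and `\` are special characters in most shells.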

Case Study: E-commerce Shift to Scenario-Driven QA

Context:

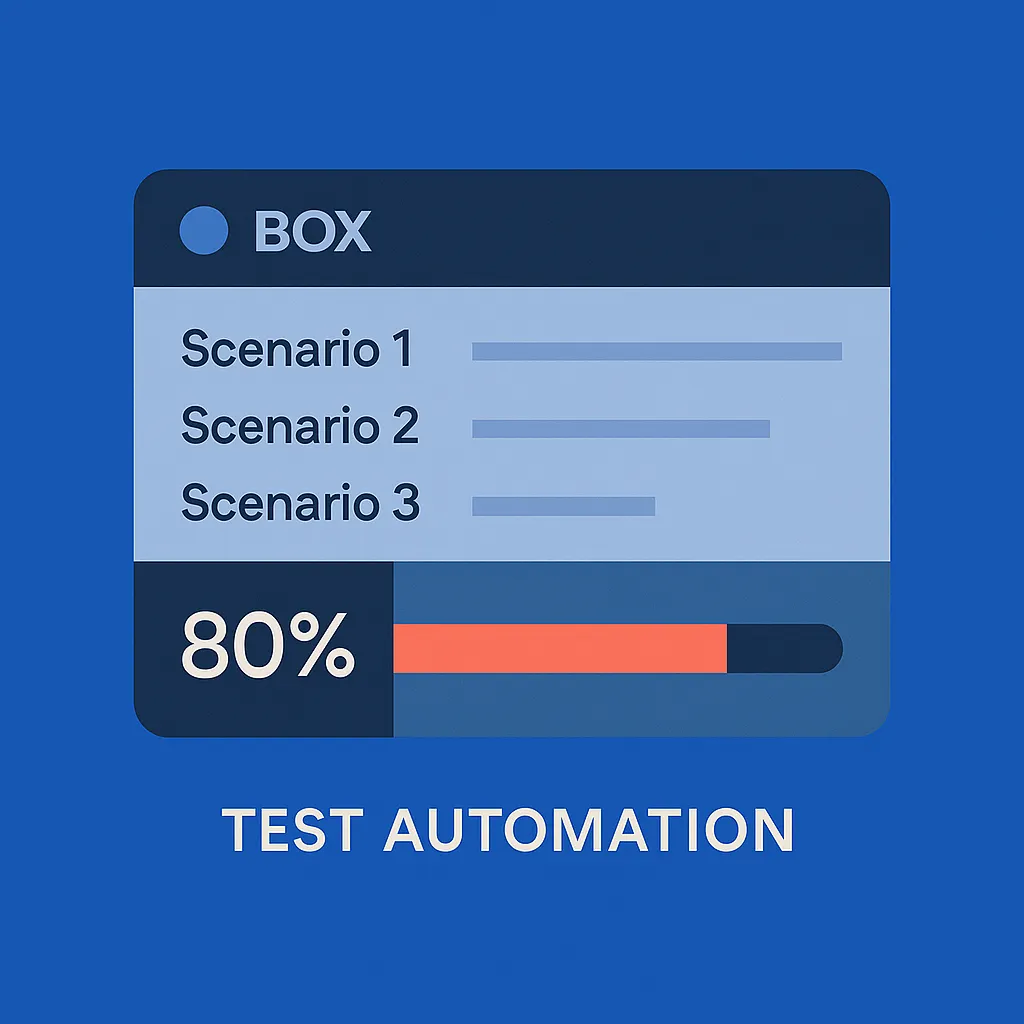

A large e-commerce QA team maintained over 1,200 test cases in TestRail.

After the team migrated to a design-system-driven UI and continuous deployment, regression cycles ballooned to 3.5 days, and 30% of cases became obsolete after each release.

Action:

The QA architect initiated a shift to scenario-driven QA:

- Reduced 1,200 test cases to 240 meaningful scenarios.

- Defined 12 key business journeys — from first visit to payment.

- Stored scenarios in Git and linked them to Playwright and Lighthouse tests.

- Published CI results to Slack for full-team visibility.

Results after 3 months:

| Metric | Before | After |
|---|---|---|
| Regression duration | 3.5 days | 11 hours |
| Case relevance | 70% | 95% |
| Defects found pre-release | n/a | +18% |
| QA involvement in discovery | n/a | +40% |

Measuring Success

| Category | Metric | Outcome |
|---|---|---|
| Coverage | # of key business scenarios | Fewer, but more meaningful |
| Release speed | Average regression duration | Reduced by 2–4× |
| Quality | % of issues found pre-release | Increased |
| Transparency | Scenario visibility for UX/Product | Full |
| Artifact relevance | Outdated cases | Nearly eliminated |

Risks and Anti-Patterns

- Fake Scenarios. Simply renaming test cases as “scenarios” without changing mindset.

- Lost Traceability. Scenarios disconnected from metrics or CI lose purpose.

- No Ownership. Every scenario needs a responsible owner — not just a file maintainer.

- QA Isolation. Without UX and product involvement, scenarios quickly drift from reality.
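Two of these anti-patterns, lost traceability and missing ownership, can be caught mechanically before a scenario is merged. The sketch below is one possible check. The `ScenarioRecord` shape loosely follows the YAML scenario example, and the function name, messages, and sample ID are assumptions. Fake scenarios and QA isolation still need human review.

```typescript
// Hypothetical minimal scenario record; fields loosely follow the YAML example
// (owner, business goal, linked tests).
interface ScenarioRecord {
  id: string;
  owner?: string;
  businessGoal?: string;
  linkedTests: string[];
}

// Report the anti-patterns that can be detected without human judgment.
function lintScenario(s: ScenarioRecord): string[] {
  const problems: string[] = [];
  if (!s.owner?.trim()) problems.push("no owner");
  if (!s.businessGoal?.trim()) problems.push("no business goal (lost traceability)");
  if (s.linkedTests.length === 0) problems.push("no linked tests (lost traceability)");
  return problems;
}

const orphaned: ScenarioRecord = { id: "SCN-200", owner: "QA Lead", linkedTests: [] };
console.log(lintScenario(orphaned));
```

Run as a pre-merge check, a lint like this turns "every scenario needs an owner" from a guideline into a rule the pipeline enforces.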

The New Role of QA

Scenario-driven QA redefines quality work:

- QA as Analyst: understands business context and data dependencies.

- QA as UX Curator: identifies where flows break.

- QA as Integrator: connects APIs, UI, and business rules into a unified logic of quality.

Quality becomes an ongoing conversation — not a checklist at the end of a sprint.

Building a Culture of Scenario-Driven Quality

For scenario thinking to thrive, teams need three conditions:

- Shared Goals: QA, product, and design measure success in the same terms — user value.

- Transparent Artifacts: Scenarios are open to everyone. They are part of the product’s documentation, not internal QA notes.

- Continuous Feedback: Each scenario deviation is treated as a learning signal, not a failure.

Postscript: Scenarios as a Language of Trust

A test case answers: “Does the function work?”

A scenario answers: “Does the business work?”

When QA thinks in scenarios, a product becomes more than a sum of features — it becomes a system of intentions.

That’s what mature quality really means: understanding causes, not controlling effects.

We build QA processes around user scenarios, not checklists.

That’s what turns quality from a control mechanism into a natural outcome of understanding the product.

Oct 29, 2025