Introduction

Quality Assurance is often treated as an afterthought: testers join at the end of a sprint, raise a batch of defects, and the team squeezes in fixes just before release. That can work for a while, but over time deadlines move, marketing plans slide, and team energy erodes under a backlog of late rework.

We take a different path. Integrated QA is built into a project from the first sketch of an idea. It stays low-key, with no extra ceremonies, yet in our experience it saves weeks of correction, protects budgets, and keeps weekends free. Below we explain how this quiet QA layer turns stressful launches into steady flow, which business signals show the benefit, and how to adopt the practice without new bureaucracy or headcount.

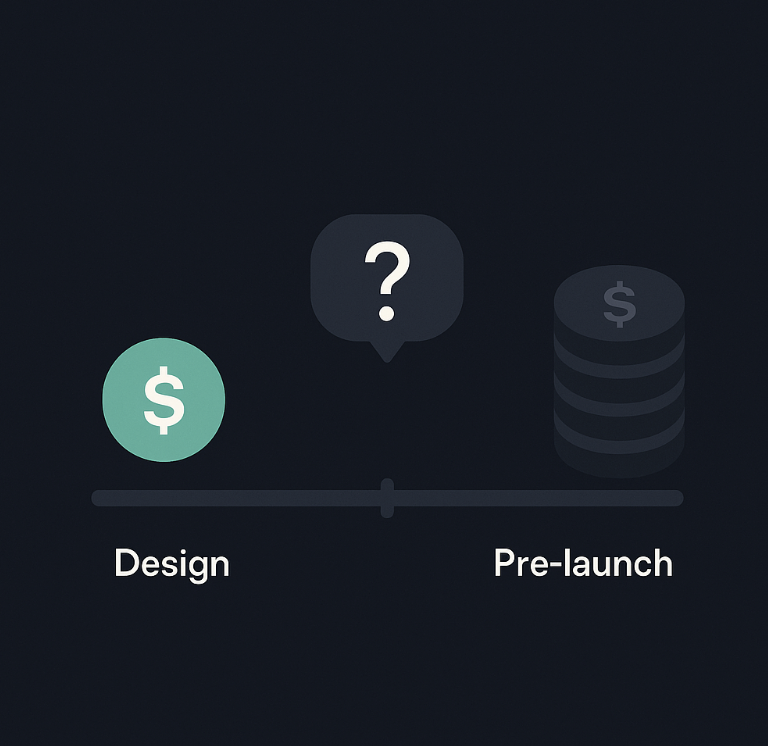

Why Early QA Pays Off for Marketing Sites

Every launch cycle begins with optimism: polished mock-ups, a motivated team, a tight timeline. Then, as go-live day approaches, a wave of last-minute tickets appears. How does the hero video behave on a slow 3G connection? What does the page look like on a 320-px-wide phone, and again when it is rotated to landscape? Is the primary CTA tracked correctly? Does the layout hold up in Firefox and in the latest releases of Chrome and Safari?

These are perfectly reasonable questions, just raised at the priciest possible moment. Designers rush to tweak breakpoints, engineers patch scripts, PMs juggle scope, and marketing delays the campaign. Nobody is careless; the issue is timing. Critical questions land when answers cost the most. Integrated QA flips that order: the same issues surface while wireframes are still fluid, and fixes take minutes instead of days.

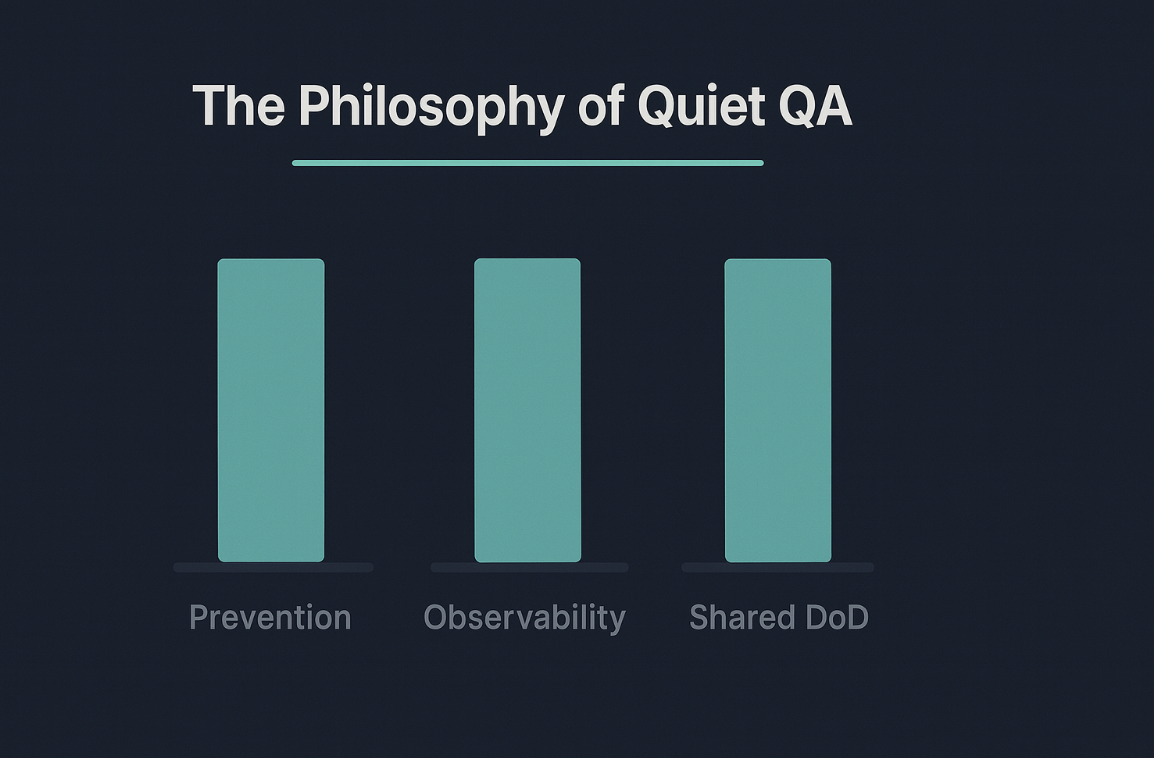

The Philosophy of Quiet QA

Integrated QA rests on three simple convictions:

- Prevention is cheaper than detection. A flaw spotted in a rough Figma mock-up takes minutes to adjust; the same flaw in production takes hours, and reputation points, to repair.

- Observable beats guessable. If behavior can’t be measured, people will argue about whether it works. Every critical path should be verifiable by a one-click check, a metric, or an alert.

- Quality is a shared definition, not a phase. When designers, PMs, engineers, and QA speak the same language of “done,” testing shifts from policing to co-navigation.

These ideas are obvious; the power lies in applying them consistently at every stage.

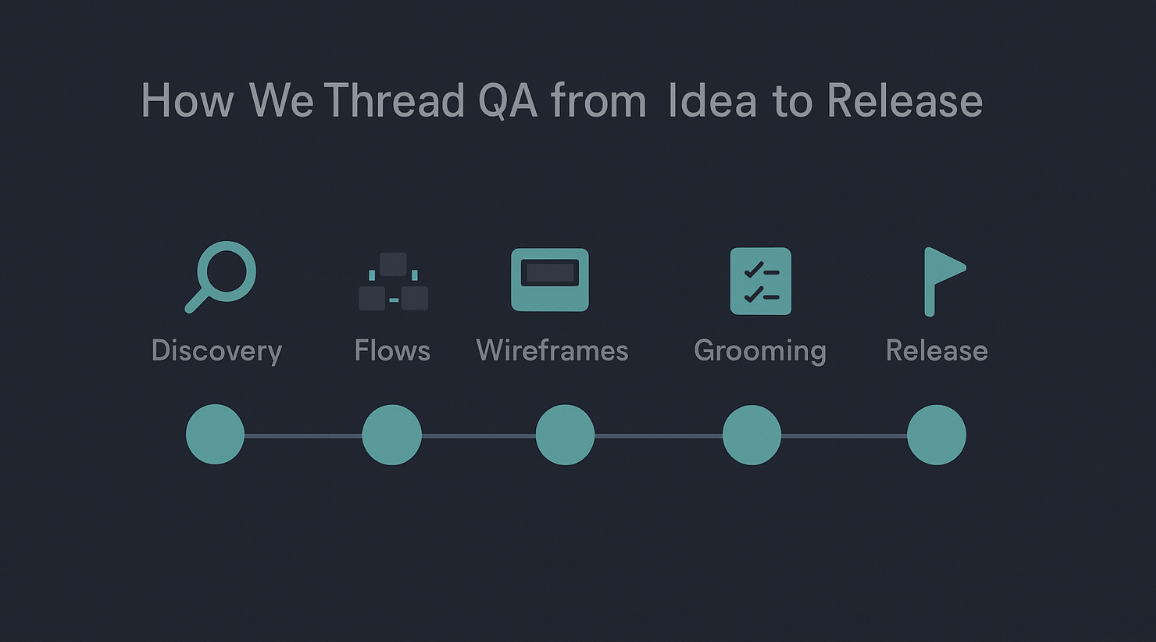

How We Thread QA from Idea to Release

Discovery

While stakeholders discuss value propositions, QA asks the quietly valuable questions: compliance, localization needs, performance constraints, analytics goals. Answers land in the brief before a designer even opens Figma.

User-flow mapping

As journeys are sketched, QA hunts for invisible states: timeouts, permission errors, empty content, fallback routes. Those branches are added to the flow so “unhappy” paths are designed on purpose.

Wireframes and hi-fi mock-ups

QA leaves pinpoint comments where decisions are made: focus order after validation, empty-state messaging, rate-limit copy, cookie-consent interactions. Most comments resolve instantly; a few trigger redesigns while pixels are still cheap.

Grooming and sprint planning

By planning time, acceptance criteria already live next to designs. QA sizes test effort, flags tooling needs (preview URLs, smoke checks), and records known risks. No one discovers an “impossible-to-test” step on day seven.

Release and retro

On release day QA brings a small list titled “Things that never broke.” In retro the team notes the calmest deploy of the month and loops the lessons back into design standards.

Compounding Wins of Early QA

Complete journeys

Edge cases, offline moments, and permission variations are mapped in design rather than discovered by support. This doesn’t make screens cluttered; it makes them resilient.

Testable specs

Measurable acceptance criteria give automation something real to assert and provide analytics with clear events to track. Ambiguity disappears from stand-ups and Slack threads.
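As an illustration of a measurable acceptance criterion, the Python sketch below turns one hypothetical rule ("clicking the primary CTA fires exactly one `cta_click` event with `location` set to `hero`") into a check that automation can run against analytics events captured during a test. The event name, the `location` field, and the list-of-dicts capture format are assumptions; substitute whatever your analytics layer actually records.

```python
from typing import Any

# Hypothetical acceptance criterion, phrased so a machine can check it:
# "Clicking the primary CTA produces exactly one `cta_click` event
#  with location == 'hero'."
REQUIRED_EVENT = "cta_click"
REQUIRED_FIELDS = {"location": "hero"}


def check_cta_tracking(events: list[dict[str, Any]]) -> list[str]:
    """Return human-readable failures; an empty list means the criterion passes."""
    matches = [e for e in events if e.get("event") == REQUIRED_EVENT]
    if len(matches) != 1:
        return [f"expected exactly 1 '{REQUIRED_EVENT}' event, got {len(matches)}"]
    failures = []
    for field, expected in REQUIRED_FIELDS.items():
        actual = matches[0].get(field)
        if actual != expected:
            failures.append(f"'{field}' should be {expected!r}, got {actual!r}")
    return failures


# Events captured during a test run (hypothetical shape).
captured = [
    {"event": "page_view", "path": "/"},
    {"event": "cta_click", "location": "hero"},
]
print(check_cta_tracking(captured))  # []
```

Returning a list of failures rather than a bare boolean keeps the criterion readable in a test report: a duplicate event and a wrong `location` show up as different messages.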

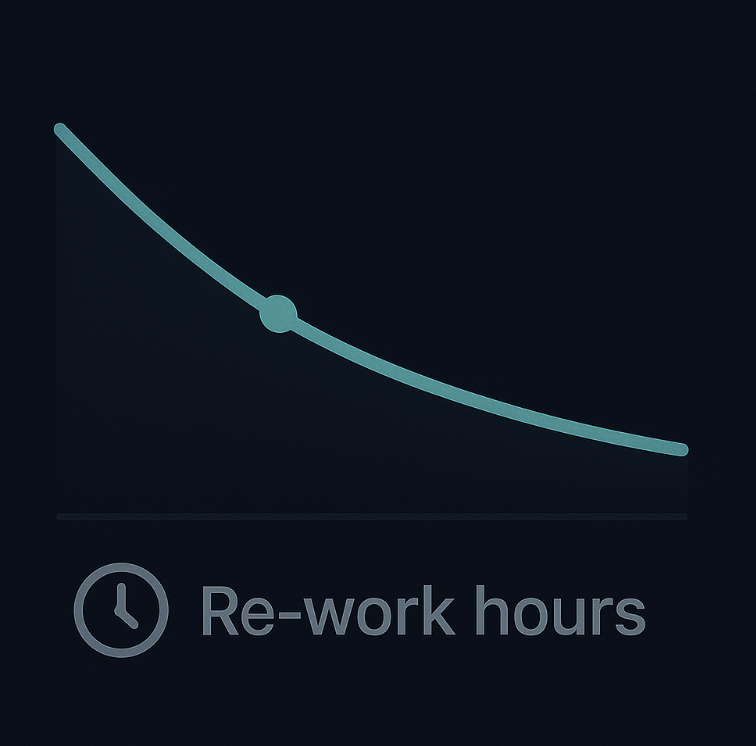

Less rework

When questions are answered before code, the team avoids painful design reversals mid-sprint. In our practice, adding QA comments in Figma reduced reopened design tickets by about 35% (internal observation across recent sprints; results vary by team and scope).

Higher velocity

Fewer clarifications and fewer blockers keep developers in flow and releases on the original date. Marketing plans stop slipping, and confidence returns to ship day.

The Silent Economics

You don’t need an elaborate dashboard to see progress. Five lightweight signals tell the story:

- defect-escape rate trends down;

- reopened tickets fall;

- lead time from code-complete to production shrinks without cutting scope;

- mean time to restore (MTTR) shortens when something does slip;

- and releases feel calmer and more predictable, which we see echoed in retros.
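The four countable signals need nothing more than per-sprint numbers from your tracker. The Python sketch below shows one way to compute them. The `Sprint` field names are hypothetical, and the formulas are common definitions we assume here (for example, defect-escape rate as defects first found in production divided by all defects found), not a prescribed standard. The fifth signal, calmer releases, stays a retro conversation.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Sprint:
    """Per-sprint counts exported from an issue tracker (field names are hypothetical)."""
    name: str
    defects_before_release: int   # caught in design review, code review, or preview
    defects_escaped: int          # reported after the release reached production
    tickets_closed: int
    tickets_reopened: int
    lead_times_h: list[float]     # code-complete -> production, one entry per change
    restore_times_h: list[float]  # incident detected -> service restored, per incident


def signals(s: Sprint) -> dict[str, float]:
    """Compute four of the five signals; each falls back to 0.0 when there is no data."""
    found = s.defects_before_release + s.defects_escaped
    return {
        # Share of all defects that were first found in production.
        "defect_escape_rate": s.defects_escaped / found if found else 0.0,
        # Share of closed tickets that had to be reopened.
        "reopen_rate": s.tickets_reopened / s.tickets_closed if s.tickets_closed else 0.0,
        # The median keeps one unusually slow change from skewing the picture.
        "median_lead_time_h": median(s.lead_times_h) if s.lead_times_h else 0.0,
        # Mean time to restore across the sprint's incidents.
        "mttr_h": sum(s.restore_times_h) / len(s.restore_times_h) if s.restore_times_h else 0.0,
    }


# Made-up numbers, only to show a sprint-over-sprint comparison.
before = Sprint("Sprint 1", 6, 4, 20, 5, [30.0, 48.0, 72.0], [5.0, 3.0])
after = Sprint("Sprint 2", 12, 2, 22, 2, [20.0, 26.0, 40.0], [2.0])

for sprint in (before, after):
    print(sprint.name, {k: round(v, 2) for k, v in signals(sprint).items()})
```

Using rates rather than raw counts keeps the trend honest when sprint size varies; the direction of each number across sprints matters more than any single value.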

A real-world snapshot: after two wireframe sessions with QA in the room, the team behind one marketing site cut design rework hours by about 60% in the following sprint. The release shipped on a weekday afternoon, with no overtime and no war room (internal observation across recent sprints; results vary by team and scope).

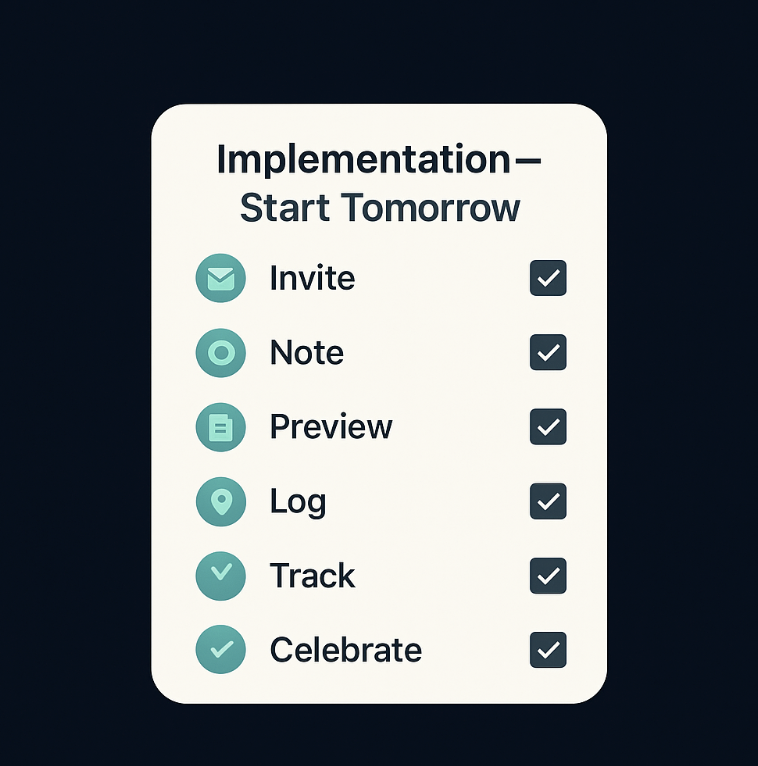

Implementation: Start Tomorrow

Start small and keep it light:

- Add a 10-minute “What could break?” slot to your next design review.

- Keep the Definition of Done in the same file as the mock-ups so there is one source of truth.

- Create a preview URL for each pull request and run a tiny smoke path (landing → CTA → form submit).

- Keep a prevented-defect log in Notion, one line per entry, and review it monthly.

- Track reopened tickets per sprint and share the trend in retro.

- Celebrate boring releases: make the invisible win visible.
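For the tiny smoke path (landing → CTA → form submit), here is a minimal sketch in Python using only the standard library. It assumes the landing page marks its primary CTA with `id="primary-cta"`, that the CTA's target page contains the form, that the form accepts an `email` field, and that any 2xx response to the submit counts as success; those names, the preview URL, and the success rule are hypothetical placeholders. The fetcher is injectable, so the path can be exercised offline, as the fake preview at the bottom shows.

```python
import urllib.error
import urllib.parse
import urllib.request
from html.parser import HTMLParser
from typing import Callable, Optional

# A fetcher takes (url, form_data) and returns (status, body); form_data=None means GET.
Fetch = Callable[[str, Optional[dict]], tuple[int, str]]


def http_fetch(url: str, form_data: Optional[dict] = None) -> tuple[int, str]:
    """Standard-library fetcher: GET, or a URL-encoded POST when form_data is given."""
    body = urllib.parse.urlencode(form_data).encode() if form_data is not None else None
    request = urllib.request.Request(url, data=body)  # data set -> POST
    try:
        with urllib.request.urlopen(request, timeout=10) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, ""
    except urllib.error.URLError:
        return 0, ""  # DNS failure, refused connection, and similar


class PageScan(HTMLParser):
    """Records the primary CTA link and the first form's action on one page."""

    def __init__(self) -> None:
        super().__init__()
        self.cta_href: Optional[str] = None
        self.form_action: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "a" and a.get("id") == "primary-cta" and self.cta_href is None:
            self.cta_href = a.get("href")
        if tag == "form" and self.form_action is None:
            self.form_action = a.get("action") or ""  # empty action posts to the same page


def scan(html: str) -> PageScan:
    parser = PageScan()
    parser.feed(html)
    return parser


def smoke_path(base_url: str, fetch: Fetch = http_fetch) -> list[str]:
    """Landing -> CTA -> form submit. Returns failures; an empty list means the path works."""
    status, html = fetch(base_url, None)
    if status != 200:
        return [f"landing page returned {status}"]
    cta = scan(html).cta_href
    if not cta:
        return ["landing page has no #primary-cta link"]

    form_url = urllib.parse.urljoin(base_url, cta)
    status, html = fetch(form_url, None)
    if status != 200:
        return [f"CTA target {form_url} returned {status}"]
    action = scan(html).form_action
    if action is None:
        return [f"no form found on {form_url}"]

    submit_url = urllib.parse.urljoin(form_url, action)
    status, _ = fetch(submit_url, {"email": "smoke-test@example.com"})
    if not 200 <= status < 300:
        return [f"form submit to {submit_url} returned {status}"]
    return []


# Offline usage: a fake fetcher imitating a preview deployment (hypothetical pages).
FAKE_SITE = {
    "https://preview.example.com/": '<a id="primary-cta" href="/signup">Get started</a>',
    "https://preview.example.com/signup": '<form action="/api/signup" method="post"></form>',
}


def fake_fetch(url: str, form_data: Optional[dict] = None) -> tuple[int, str]:
    if form_data is not None:
        return (201, "") if url == "https://preview.example.com/api/signup" else (404, "")
    return (200, FAKE_SITE[url]) if url in FAKE_SITE else (404, "")


print(smoke_path("https://preview.example.com/", fake_fetch))  # []
```

In CI, calling `smoke_path` with each pull request's preview URL and failing the job when the returned list is non-empty would be one way to wire it in. This check reads raw HTML only, so a CTA rendered by JavaScript would need a browser-driving tool such as Playwright instead.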

Conclusion

Integrated QA is not about finding every defect; it’s about ensuring many never occur in the first place.

By embedding quality checks into early stages, it replaces last-minute firefighting with predictable, low-stress delivery. This approach shifts QA from a reactive gatekeeper role to a proactive enabler of consistent outcomes. When QA joins while ideas are still taking shape, teams ship faster, protect focus, and preserve the rare luxury of a launch without surprises.

FAQ

Will early QA slow us down?

It actually speeds things up: small adjustments made early prevent big delays later.

What if our team is small?

Smaller teams feel the impact sooner: with fewer people, every mistake takes a bigger share of time and focus away from the real work.

Isn’t automation costly to maintain?

Not as costly as hot-fixes during a campaign. Begin with one critical smoke path.

Aug 22, 2025