Getting Started with MCP Protocol: A Beginner’s Guide

Sam Altman, CEO of OpenAI, once said: "I think that AI will probably, most likely, lead to the end of the world. But in the meantime, there will be great companies created with serious machine learning."

While the remark was partly humorous, the second part has proven increasingly true in recent years.

Artificial Intelligence is now part of almost every industry, and its progress shows no signs of slowing. Many companies like OpenAI (ChatGPT), Anthropic (Claude), and Mistral initially focused on building advanced large language models (LLMs).

While these models are powerful, they are still limited in some ways as they mainly work by predicting text. This is where MCP comes in.

If you've been following AI-related news and blogs lately, you've probably noticed the term "MCP" mentioned almost everywhere.

But what does it mean? Why does it matter, and what opportunities can it create for businesses and individuals alike?

Well, Model Context Protocol (MCP) is not a complicated theory. Think of MCP as a universal translator that sits between AI models and the outside world (e.g., apps, databases, or workflows).

If this still sounds confusing to you, don't worry!

In this article, we'll cover everything about MCP: its origins and motivations, the problems it solves, how it works in practice, its architecture, real-world integrations, and how it compares to AI agent frameworks.

What Is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is a standardized, open-source protocol that allows AI agents powered by large language models (LLMs) to access and retrieve real-time data from external tools, databases, and APIs.

In practice, MCP provides a straightforward way for AI agents (built on top of LLMs) to access information, perform actions, and connect across different platforms. By acting as a bridge, MCP makes AI agents more useful in practical scenarios and lets businesses and individuals link their apps, tools, and data to AI systems with less effort.

MCP was created to solve the problem of AI isolation by providing a secure, efficient, and standardized way for AI-powered agents to access and use external data sources and systems without retraining. This makes them more capable and context-aware. AI assistants can finally move beyond being ordinary autocomplete tools and become action-taking helpers.

MCP’s role in AI and Distributed Systems

In general, the Model Context Protocol (MCP) acts as a connector that provides a secure communication environment for AI agents, external services, and distributed systems.

It works with any system that can be exposed through an MCP server, including cloud services (many of which are distributed systems), local files, and APIs.

For example, an agent could use MCP to pull tasks from a Trello board and update their status as projects progress. This shows how MCP uses a safe, standardized architecture to link AI agents with cloud-based services such as Jira, Trello, or Notion.

MCP Origins: Background and Motivations

According to Wikipedia, the Model Context Protocol (MCP) was first introduced by Anthropic in November 2024. It was released as an open-source standard to help software developers integrate AI agents such as Claude or GPT-4 with external resources.

The MCP protocol is based on a client–server model and uses JSON-RPC 2.0 to enable interaction between AI models and data sources. It was initially built to extend Claude’s ability to interact with external applications, but it was published as an open standard so that any AI system can use it to integrate with tools, databases, and APIs.
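To make the JSON-RPC 2.0 part concrete, here is a minimal sketch in plain JavaScript (no SDK) of the three message kinds MCP exchanges: requests, which carry an `id` and expect a reply; responses, which echo that `id`; and notifications, which have no `id` and get no reply. The helper names are hypothetical; `ping` and `notifications/initialized` are method names from the MCP specification.

```javascript
// Minimal builders for the three JSON-RPC 2.0 message kinds used by MCP.
// Helper names are hypothetical; they are not part of any MCP SDK.

// A request expects a reply, so it carries an id.
function makeRequest(id, method, params) {
  const msg = { jsonrpc: "2.0", id, method };
  if (params !== undefined) msg.params = params;
  return msg;
}

// A successful response echoes the request id and carries a result.
function makeResult(id, result) {
  return { jsonrpc: "2.0", id, result };
}

// A notification has no id; the receiver must not reply to it.
function makeNotification(method, params) {
  const msg = { jsonrpc: "2.0", method };
  if (params !== undefined) msg.params = params;
  return msg;
}

// Example: MCP's "ping" request, its empty result, and a notification.
const ping = makeRequest(1, "ping");
const pong = makeResult(ping.id, {});
const ready = makeNotification("notifications/initialized");

console.log(JSON.stringify(ping));  // {"jsonrpc":"2.0","id":1,"method":"ping"}
console.log(JSON.stringify(pong));  // {"jsonrpc":"2.0","id":1,"result":{}}
console.log(JSON.stringify(ready)); // {"jsonrpc":"2.0","method":"notifications/initialized"}
```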

What Problems Does MCP Solve?

Before the introduction of the Model Context Protocol, developers frequently had to build a custom connection between each AI system and each data source. This led to what is often called the M × N problem, where M is the number of AI models and N is the number of external tools or data sources they need to reach: connecting every model to every tool can require up to M × N separate integrations.
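The arithmetic behind the M × N problem is easy to check. Here is a small sketch, assuming every model needs every tool: point-to-point integration needs one connector per (model, tool) pair, while a shared protocol needs one client implementation per model plus one server per tool, that is, M + N. The `integrationCount` name is hypothetical.

```javascript
// Hypothetical helper: count the connectors needed with and without a shared
// protocol, assuming each of M AI applications must reach each of N tools.
function integrationCount(models, tools) {
  return {
    pointToPoint: models * tools, // one custom connector per (model, tool) pair
    withMcp: models + tools,      // one MCP client per model + one MCP server per tool
  };
}

// 3 AI applications and 5 tools: 15 custom connectors versus 8 MCP components.
console.log(integrationCount(3, 5)); // { pointToPoint: 15, withMcp: 8 }
```

The gap widens quickly: 10 applications and 20 tools would need 200 point-to-point connectors, but only 30 MCP components.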

These integrations were usually brittle, expensive, and non-scalable. There were also several other problems, such as:

Lack of standardization. Each company had to build single-use integrations for tools like Google Drive, Jira, or GitHub.

Static data. Without live integrations, models relied mostly on their training data, which cannot reflect changes made after training, and had no standard way to fetch updates from a server.

Scalability issues. The more agents and tools there are, the harder it becomes to manage integrations and system state.

Limited visibility. Traditional systems don't let AI agents know what actions other agents or tools can perform. This limits their ability to interact efficiently and prevents smooth automation.

Security risks. Pasting sensitive data into prompts was risky, as it could be exposed or misused.

Time-consuming development. Software developers needed to create and maintain each integration manually.

With these challenges in mind, MCP was developed as an open-source standard described as "USB-C for AI" in its introductory documentation. This lets developers build a single integration that can work with any MCP-compatible AI client, such as Claude, ChatGPT, or an application built on a local model.

The MCP protocol uses standardized message formats and metadata to ensure that agents and tools interpret instructions consistently. Each tool only needs one MCP interface, which reduces development time and potential errors.

Hiring a skilled app development team is critical to building intelligent and responsive applications. By working with expert developers to connect models to real-time data sources and tools through MCP, your business can transform static language models into dynamic assistants that perform meaningful actions.

How MCP Works: Practical Overview

The MCP protocol lets AI agents securely interact with external resources and other agents without a separate custom integration for each one.

Without this protocol, connecting an AI to each new tool is like teaching it a new dialect.

For example, imagine your AI assistant needs to create a new event in Google Calendar and then send a reminder to Slack. Without MCP, each connection would require a custom setup. With MCP, the assistant talks to both services through MCP servers using the same protocol, so it can create your calendar event and send your reminder without any extra integration work.
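To see what "the same protocol" means here, the sketch below builds the two requests an MCP client might send. Both use the `tools/call` method with the same shape; only the target server, tool name, and arguments differ. The tool names `create_event` and `post_message` and their arguments are hypothetical; a real Google Calendar or Slack MCP server defines its own tools, which the client discovers at runtime.

```javascript
// Build an MCP "tools/call" request. The shape is the same for every server.
function toolCall(id, name, args) {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical tools on two different MCP servers.
const calendarRequest = toolCall(1, "create_event", {
  title: "Project review",
  start: "2025-10-20T10:00:00Z",
});
const slackRequest = toolCall(2, "post_message", {
  channel: "#team",
  text: "Reminder: project review at 10:00 UTC",
});

// Each request goes over the connection to its own server,
// but the client code that builds and sends them is identical.
for (const req of [calendarRequest, slackRequest]) {
  console.log(req.params.name, "->", JSON.stringify(req.params.arguments));
}
```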

Key MCP Terms and Concepts

Understanding the key terminology and concepts of the MCP protocol is essential, as each plays a specialized role in how the protocol works.

Here are a few essential terms and concepts surrounding the Model Context Protocol ecosystem.

- Agent - an autonomous AI system that runs an MCP client. It can send and receive MCP messages and decide when to use tools or access resources using the protocol.

- Node - any component in the MCP network, such as a client (within an AI agent) or a server (an external tool or service).

- Route - the mechanism by which MCP sends a request to the appropriate data source or service, based on the specified channel.

- Message - the structured communication format in MCP, based on JSON-RPC 2.0. Messages carry prompts, data, or results exchanged between clients and servers. (For more information, see the Architecture documentation.)

- Capability - a feature that a server (or client) declares when a session starts, such as the specific actions or services a server can perform or offer to agents during that session.

- Resources - information or datasets a server provides, such as a database query result, document, or file, that the agent can access via MCP.

- Context - structured data or resources that give an LLM the information it needs to handle requests effectively. The Resources documentation mentions that this context can come from files, databases, or APIs.

- Client - the component that runs inside an AI agent or host application. It sends requests to a server, receives responses, and keeps track of the stateful session. This lets the agent safely use external tools and resources.

- Server - usually an external tool, database, or service. According to the Architecture documentation, a server exposes resources, tools, and capabilities that a client can use. A server can run as a local process or a remote service.

- Host - AI-powered applications like Windsurf, Claude Desktop, and VS Code act as hosts. They create built-in MCP clients, typically one per server, to communicate with MCP servers and access their resources.

MCP Message Flow: From Sender to Receiver

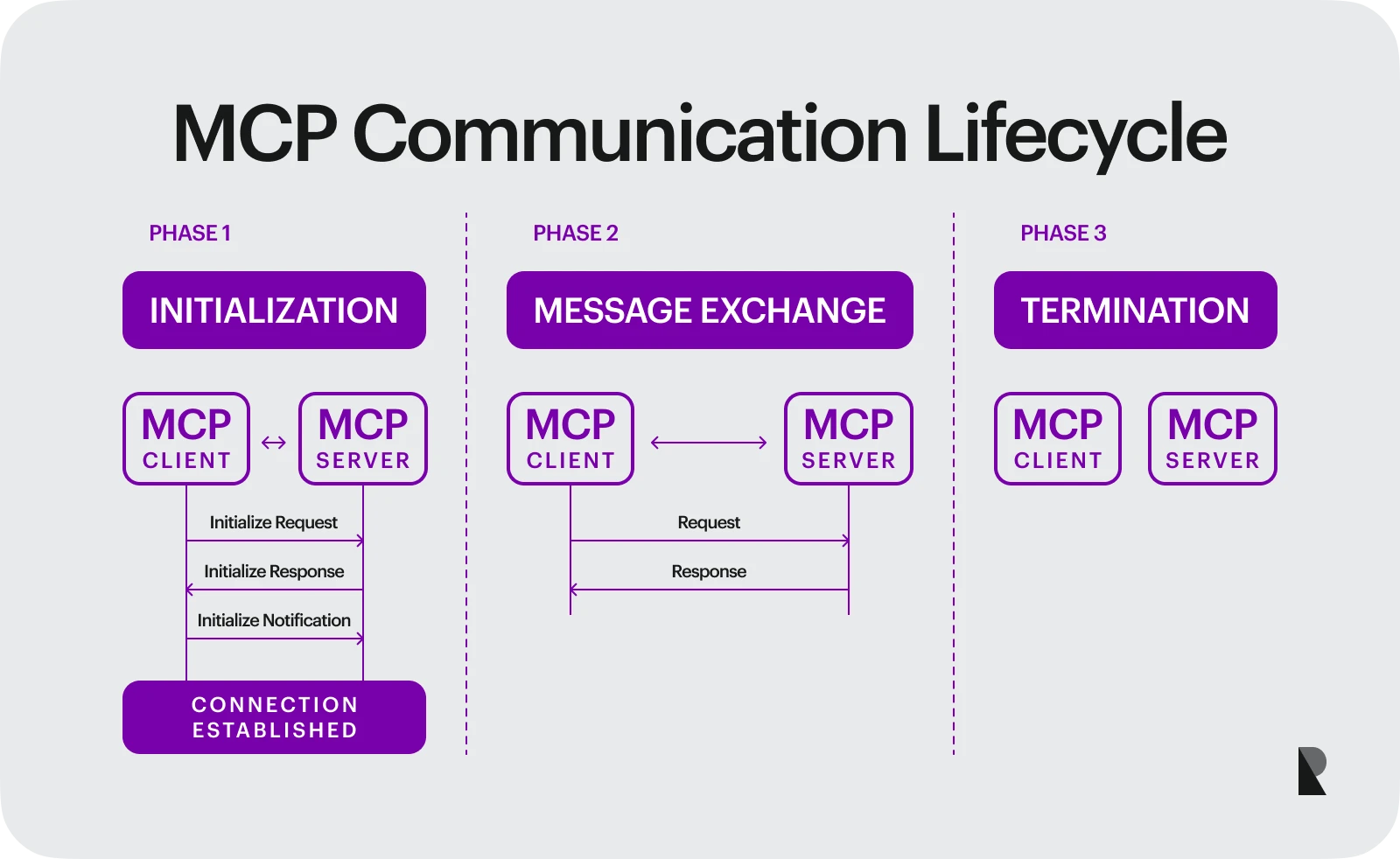

The MCP Communication Lifecycle explains what happens in each step, how the components interact, and when the process ends with termination.

The Model Context Protocol message flow can be broken down into three main steps: initialization, message exchange, and termination.

However, for clarity's sake, let's expand it further into five steps that explain how clients and servers talk to each other:

Phase 1: Initialization and handshake

Before sending and receiving messages, the client and server must initialize a connection, negotiate capabilities, and agree on the protocol version.
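Here is a minimal sketch of the handshake messages, following the shapes in the MCP specification: the client sends `initialize` with the protocol version it wants and its capabilities, the server answers with the version it will use and its own capabilities, and the client confirms with a `notifications/initialized` notification. The version string `2025-06-18` is one published revision of the spec; the client and server names and the `negotiateVersion` helper are hypothetical.

```javascript
// Versions this hypothetical server implements, newest first.
const SUPPORTED_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"];

// Server-side rule from the spec: echo the client's version if supported,
// otherwise offer another supported version (the latest one here).
function negotiateVersion(requested) {
  return SUPPORTED_VERSIONS.includes(requested) ? requested : SUPPORTED_VERSIONS[0];
}

// 1. Client -> server: initialize request.
const initializeRequest = {
  jsonrpc: "2.0",
  id: 1,
  method: "initialize",
  params: {
    protocolVersion: "2025-06-18",
    capabilities: { roots: { listChanged: true } }, // features the client offers
    clientInfo: { name: "example-client", version: "1.0.0" }, // hypothetical
  },
};

// 2. Server -> client: the agreed version plus the server's own capabilities.
const initializeResult = {
  jsonrpc: "2.0",
  id: initializeRequest.id,
  result: {
    protocolVersion: negotiateVersion(initializeRequest.params.protocolVersion),
    capabilities: { tools: { listChanged: true }, resources: {} },
    serverInfo: { name: "example-server", version: "1.0.0" }, // hypothetical
  },
};

// 3. Client -> server: the session is ready (notification: no id, no reply).
const initializedNotification = { jsonrpc: "2.0", method: "notifications/initialized" };

console.log(initializeResult.result.protocolVersion); // 2025-06-18
```

If the client cannot work with the version the server returns, the specification says it should disconnect.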

Phase 2: Discovery

After initialization, the client sends queries to the server to identify available tools, resources, and prompts.
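Discovery uses list methods such as `tools/list`, `resources/list`, and `prompts/list`. Results can be paginated: a response may include a `nextCursor`, which the client passes back as `cursor` to fetch the next page. The sketch below collects every tool name across pages. `fakeServer`, its page data, and `listAllTools` are hypothetical, and the calls are synchronous for brevity, whereas a real client awaits each response.

```javascript
// Hypothetical server data: two pages of tool descriptors.
const PAGES = {
  start: { tools: [{ name: "greet" }, { name: "search_files" }], nextCursor: "page-2" },
  "page-2": { tools: [{ name: "read_file" }] }, // no nextCursor: last page
};

// Hypothetical stand-in for sending a request and receiving its result.
function fakeServer(request) {
  if (request.method !== "tools/list") throw new Error("unsupported method");
  const cursor = request.params && request.params.cursor;
  return PAGES[cursor || "start"];
}

// Follow nextCursor until the server stops returning one.
function listAllTools(send) {
  const names = [];
  let cursor;
  let id = 1;
  do {
    const params = cursor ? { cursor } : {};
    const result = send({ jsonrpc: "2.0", id: id++, method: "tools/list", params });
    for (const tool of result.tools) names.push(tool.name);
    cursor = result.nextCursor;
  } while (cursor);
  return names;
}

console.log(listAllTools(fakeServer)); // [ 'greet', 'search_files', 'read_file' ]
```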

Phase 3: Message exchange

Messages are sent to the appropriate server or tool based on the client's request. The server receives the message, interprets the instructions, performs the requested action, and sends back a result or acknowledgment.
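On the server side, "interprets the instructions and performs the requested action" boils down to a dispatch step: look up the handler, run it, and wrap its output in a response with the same `id`. The sketch below handles `tools/call` for a single hypothetical `greet` tool, similar to the one built in the Getting Started section, and returns the `content` array shape MCP uses for tool results. SDKs such as the TypeScript SDK do this routing for you; the names here are illustrative.

```javascript
// Hypothetical registry: tool name -> handler that returns MCP tool-result content.
const tools = new Map([
  ["greet", ({ name }) => ({ content: [{ type: "text", text: `Hello, ${name}!` }] })],
]);

function errorResponse(id, code, message) {
  return { jsonrpc: "2.0", id, error: { code, message } };
}

// Route one request to its handler and wrap the output in a response
// that carries the same id as the request.
function handleRequest(request) {
  if (request.method !== "tools/call") {
    return errorResponse(request.id, -32601, `Method not found: ${request.method}`);
  }
  const { name, arguments: args = {} } = request.params || {};
  const handler = tools.get(name);
  if (!handler) {
    return errorResponse(request.id, -32602, `Unknown tool: ${name}`);
  }
  return { jsonrpc: "2.0", id: request.id, result: handler(args) };
}

const response = handleRequest({
  jsonrpc: "2.0",
  id: 7,
  method: "tools/call",
  params: { name: "greet", arguments: { name: "Ada" } },
});
console.log(response.result.content[0].text); // Hello, Ada!
```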

Phase 4: Error handling

The server or client detects and handles errors if something goes wrong during any phase.
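MCP distinguishes two kinds of failure. Protocol-level problems, such as an unknown method or invalid parameters, come back as a JSON-RPC `error` object with a standard code like `-32601` (method not found) or `-32602` (invalid params). Failures inside a tool, such as an upstream API being down, are reported as a normal `result` with `isError: true`, so the model can see what went wrong. Here is a minimal client-side sketch; `classifyResponse` is a hypothetical name.

```javascript
// Standard JSON-RPC 2.0 error codes.
const JSON_RPC_ERRORS = {
  [-32700]: "Parse error",
  [-32600]: "Invalid request",
  [-32601]: "Method not found",
  [-32602]: "Invalid params",
  [-32603]: "Internal error",
};

// Hypothetical helper: decide how a client should treat a tools/call response.
function classifyResponse(response) {
  if (response.error) {
    // Protocol error: the request itself failed.
    const label = JSON_RPC_ERRORS[response.error.code] || "Application-defined error";
    return { kind: "protocol-error", detail: `${label}: ${response.error.message}` };
  }
  if (response.result && response.result.isError) {
    // Tool error: the tool ran but failed; its content usually explains why.
    const text = (response.result.content || []).map((c) => c.text).join(" ");
    return { kind: "tool-error", detail: text };
  }
  return { kind: "success", detail: "" };
}

const failed = classifyResponse({
  jsonrpc: "2.0",
  id: 3,
  error: { code: -32602, message: "Unknown tool: foo" },
});
console.log(failed.detail); // Invalid params: Unknown tool: foo
```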

Phase 5: Termination

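When all the messages have been sent, the client or the server can end the session by closing the underlying connection; MCP does not define a dedicated shutdown message. For the stdio transport, for example, the client closes the server's input stream and waits for the process to exit.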
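For the stdio transport, the MCP specification describes a client-driven shutdown sequence: close the server's input stream, wait for it to exit, and send `SIGTERM` (then `SIGKILL`) if it does not exit in time. Here is a Node.js sketch of that sequence. The `shutdownStdioServer` helper and the 2-second grace period are assumptions, and the sketch assumes the child process is still running when it is called.

```javascript
const { spawn } = require("node:child_process");

// Hypothetical helper: shut down a stdio MCP server as the spec describes.
// Close stdin first, then escalate to SIGTERM and SIGKILL if the process lingers.
function shutdownStdioServer(child, graceMs = 2000) {
  return new Promise((resolve) => {
    const termTimer = setTimeout(() => child.kill("SIGTERM"), graceMs);
    const killTimer = setTimeout(() => child.kill("SIGKILL"), graceMs * 2);
    child.once("exit", (code, signal) => {
      clearTimeout(termTimer);
      clearTimeout(killTimer);
      resolve({ code, signal });
    });
    child.stdin.end(); // closing stdin tells the server no more messages are coming
  });
}

// Demo with a stand-in "server" that exits as soon as its stdin closes.
const child = spawn(process.execPath, [
  "-e",
  "process.stdin.resume(); process.stdin.on('end', () => process.exit(0));",
]);
shutdownStdioServer(child).then(({ code }) => console.log("server exited with code", code));
```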

Note: For more information, see the Lifecycle documentation.

MCP Architecture Explained

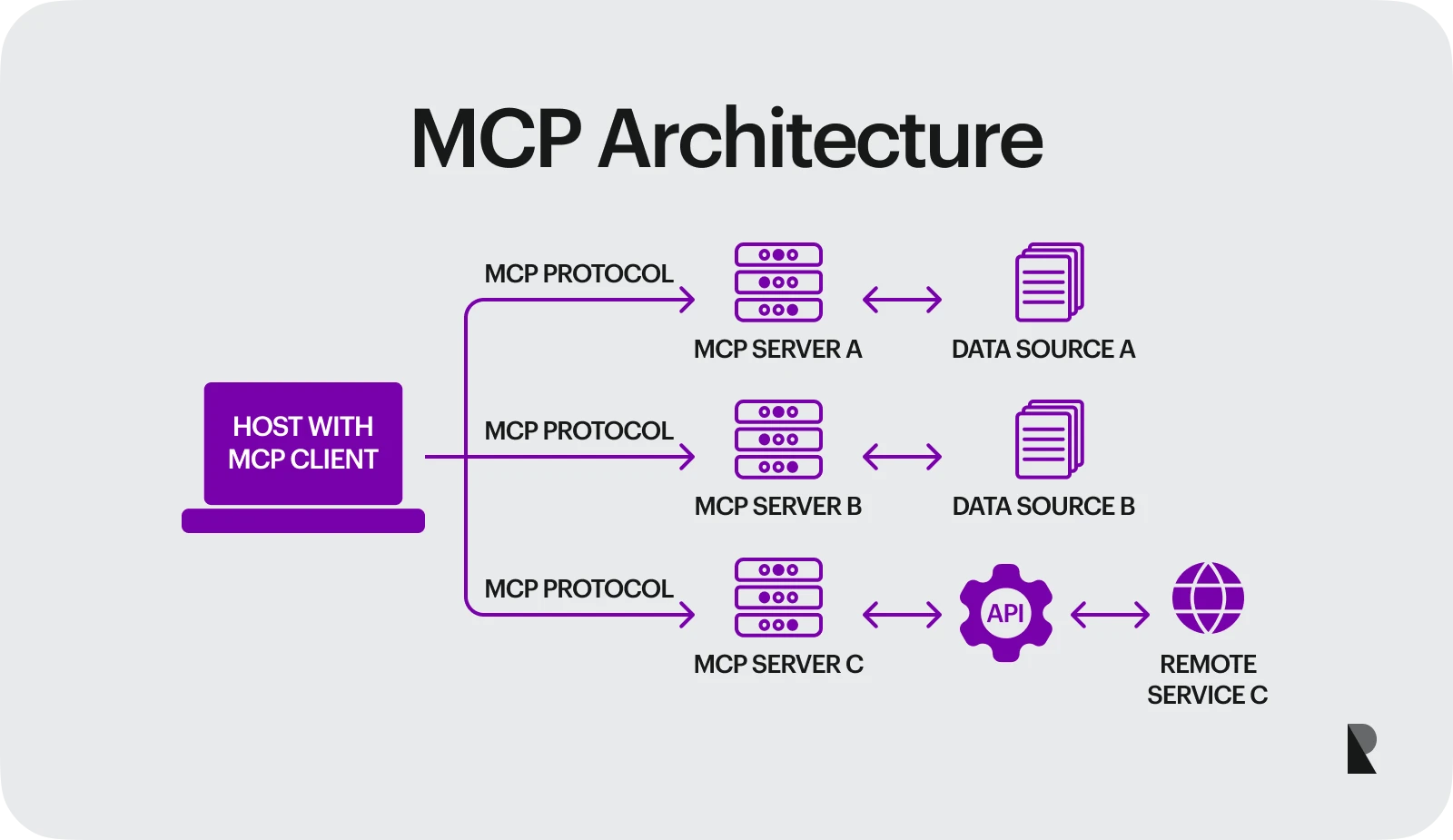

The Model Context Protocol (MCP) is built on a client-server architecture that lets AI agents and distributed systems communicate efficiently. This design gives every component a consistent way to communicate, makes solutions easier to scale, and improves system resilience.

MCP architecture consists of three main components: the Host, Client, and Server, each with specific roles and responsibilities. The Host creates an MCP Client for each server it connects to, and each Client communicates with its MCP Server to access databases, APIs, or local files and execute tasks.
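The host, client, and server split can be sketched as a host that reads a list of servers and creates one client per server. The config object mirrors the `mcpServers` shape that hosts such as Claude Desktop use in their JSON configuration. The server names, the `/path/to/notes` argument, the `weather-server.js` script, and the `Host` and `createClient` names are hypothetical, and no processes are actually started.

```javascript
// Host configuration in the "mcpServers" style: one entry per server to launch.
// Server names, paths, and scripts are hypothetical.
const config = {
  mcpServers: {
    filesystem: { command: "npx", args: ["-y", "@modelcontextprotocol/server-filesystem", "/path/to/notes"] },
    weather: { command: "node", args: ["weather-server.js"] },
  },
};

// Hypothetical client record: each client owns exactly one server connection
// (not opened in this sketch).
function createClient(serverName, { command, args = [] }) {
  return { serverName, command, args, state: "disconnected" };
}

// The host creates and tracks one client per configured server.
class Host {
  constructor(cfg) {
    this.clients = new Map();
    for (const [name, spec] of Object.entries(cfg.mcpServers)) {
      this.clients.set(name, createClient(name, spec));
    }
  }

  clientFor(serverName) {
    return this.clients.get(serverName);
  }
}

const host = new Host(config);
console.log([...host.clients.keys()]); // [ 'filesystem', 'weather' ]
```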

Building on this setup, MCP incorporates several key architectural decisions, including the following:

- Client-server separation. In MCP, the AI client is entirely separate from the data and tools (servers). This separation allows each component to be developed, updated, and scaled separately.

- JSON-RPC 2.0 message format. MCP messages use JSON-RPC 2.0, a lightweight remote procedure call (RPC) protocol that provides a standard structure for requests and responses.

- Resource-based design. MCP servers expose specific, well-defined items, such as files and database rows (resources) or callable functions (tools), rather than unrestricted raw data. This allows clients to request only what they need, keeping interactions efficient and secure.

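- Multiple transport methods. MCP separates the protocol from the way messages are carried. The specification defines two standard transports: STDIO, for servers running as local processes, and Streamable HTTP, for remote servers, which can use Server-Sent Events (SSE) to stream messages and replaces the older HTTP+SSE transport. Custom transports, such as WebSockets, are also possible. This flexibility allows MCP to work in different environments without altering the protocol itself.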

For more details, see the MCP Transports documentation.
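Over STDIO, each JSON-RPC message travels as one line of JSON: messages are delimited by newlines and must not contain embedded newlines. Because data can arrive in arbitrary chunks, a reader has to buffer until it sees a newline. Here is a minimal sketch of that framing; `encodeMessage` and `LineDecoder` are hypothetical names, and the official SDKs already implement this for you.

```javascript
// JSON.stringify without indentation never emits raw newlines
// (newlines inside strings are escaped as \n), so one message = one line.
function encodeMessage(message) {
  return JSON.stringify(message) + "\n";
}

// Hypothetical decoder: accumulates text chunks (e.g. after
// stream.setEncoding("utf8")) and returns every complete message.
class LineDecoder {
  constructor() {
    this.buffer = "";
  }

  push(chunk) {
    this.buffer += chunk;
    const messages = [];
    let newline;
    while ((newline = this.buffer.indexOf("\n")) !== -1) {
      const line = this.buffer.slice(0, newline).trim(); // trim also drops a stray \r
      this.buffer = this.buffer.slice(newline + 1);
      if (line) messages.push(JSON.parse(line));
    }
    return messages; // any incomplete tail stays buffered for the next chunk
  }
}

// A message split across two chunks is decoded only once it is complete.
const wire = encodeMessage({ jsonrpc: "2.0", id: 1, method: "ping" });
const decoder = new LineDecoder();
console.log(decoder.push(wire.slice(0, 10)).length); // 0
console.log(decoder.push(wire.slice(10)));           // [ { jsonrpc: '2.0', id: 1, method: 'ping' } ]
```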

MCP Servers: Roles and Design

MCP servers use the protocol to expose capabilities, resources, and actions to AI models.

In practice, Model Context Protocol (MCP) servers can be run either locally or remotely to do the following:

- Expose resources (such as files, database rows, or API endpoints) in a structured way.

- Advertise their capabilities to clients during initialization.

- Use the JSON-RPC 2.0 message format to transmit and receive requests and responses.

- Enforce security measures and define the boundaries of what the AI is permitted to access.
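Here is a sketch of two of those duties: exposing resources in a structured way and limiting what the AI can reach. The server below answers `resources/list` and `resources/read` from a fixed allowlist, so any URI it has not explicitly exposed is refused with the `-32002` "resource not found" code used in the MCP specification. The URIs, contents, and `handleResourceRequest` name are hypothetical.

```javascript
// The only resources this hypothetical server exposes.
const RESOURCES = new Map([
  ["file:///notes/todo.md", { name: "todo.md", mimeType: "text/markdown", text: "- Write MCP guide" }],
  ["file:///notes/ideas.md", { name: "ideas.md", mimeType: "text/markdown", text: "- Add examples" }],
]);

function handleResourceRequest(request) {
  const { id, method, params = {} } = request;
  if (method === "resources/list") {
    // Structured listing: URI, name, and type for each exposed resource.
    const resources = [...RESOURCES].map(([uri, r]) => ({ uri, name: r.name, mimeType: r.mimeType }));
    return { jsonrpc: "2.0", id, result: { resources } };
  }
  if (method === "resources/read") {
    const entry = RESOURCES.get(params.uri);
    if (!entry) {
      // Boundary: anything outside the allowlist is refused, even if it exists on disk.
      return { jsonrpc: "2.0", id, error: { code: -32002, message: "Resource not found", data: { uri: params.uri } } };
    }
    return { jsonrpc: "2.0", id, result: { contents: [{ uri: params.uri, mimeType: entry.mimeType, text: entry.text }] } };
  }
  return { jsonrpc: "2.0", id, error: { code: -32601, message: "Method not found" } };
}

const read = handleResourceRequest({
  jsonrpc: "2.0",
  id: 1,
  method: "resources/read",
  params: { uri: "file:///notes/todo.md" },
});
console.log(read.result.contents[0].text); // - Write MCP guide
```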

MCP Clients: Roles and Interactions

MCP Clients run inside the host application. Their primary role is to establish a connection with a server, starting with the initialization handshake, and to manage that connection throughout its lifecycle, including retrying after failures and shutting down.

One of the best-known examples is Claude Desktop, among the first public MCP hosts. Its built-in client runs locally on your computer and connects to MCP servers, such as a file system server, through STDIO (see the Develop with MCP documentation for sample client code).

Overall, MCP Clients are essentially involved in:

- Sending requests, data, and metadata to servers following MCP standards.

- Listening for incoming messages from servers and processing responses.

- Acting as the bridge between the host application and connected servers.

- Maintaining its server connection; a host that uses several servers typically runs one client per server.
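"Listening for incoming messages" means a client has to tell three kinds of traffic apart: responses to its own requests (they carry a `result` or `error`), requests from the server (an `id` plus a `method`, for example `roots/list` or `sampling/createMessage`), and notifications (a `method` with no `id`, such as `notifications/tools/list_changed`). Here is a minimal routing sketch; `routeIncoming` is a hypothetical name.

```javascript
// Hypothetical helper: classify a decoded JSON-RPC message arriving from a server.
function routeIncoming(message) {
  if ("result" in message || "error" in message) {
    return "response";     // settle the matching pending request
  }
  if (typeof message.method !== "string") {
    return "invalid";      // neither a response nor a call
  }
  // A method with an id expects an answer; without an id it is fire-and-forget.
  return message.id !== undefined ? "request" : "notification";
}

const inbox = [
  { jsonrpc: "2.0", id: 4, result: { tools: [] } },
  { jsonrpc: "2.0", id: "srv-1", method: "roots/list" },
  { jsonrpc: "2.0", method: "notifications/tools/list_changed" },
];
console.log(inbox.map((m) => routeIncoming(m))); // [ 'response', 'request', 'notification' ]
```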

Core Protocol Features

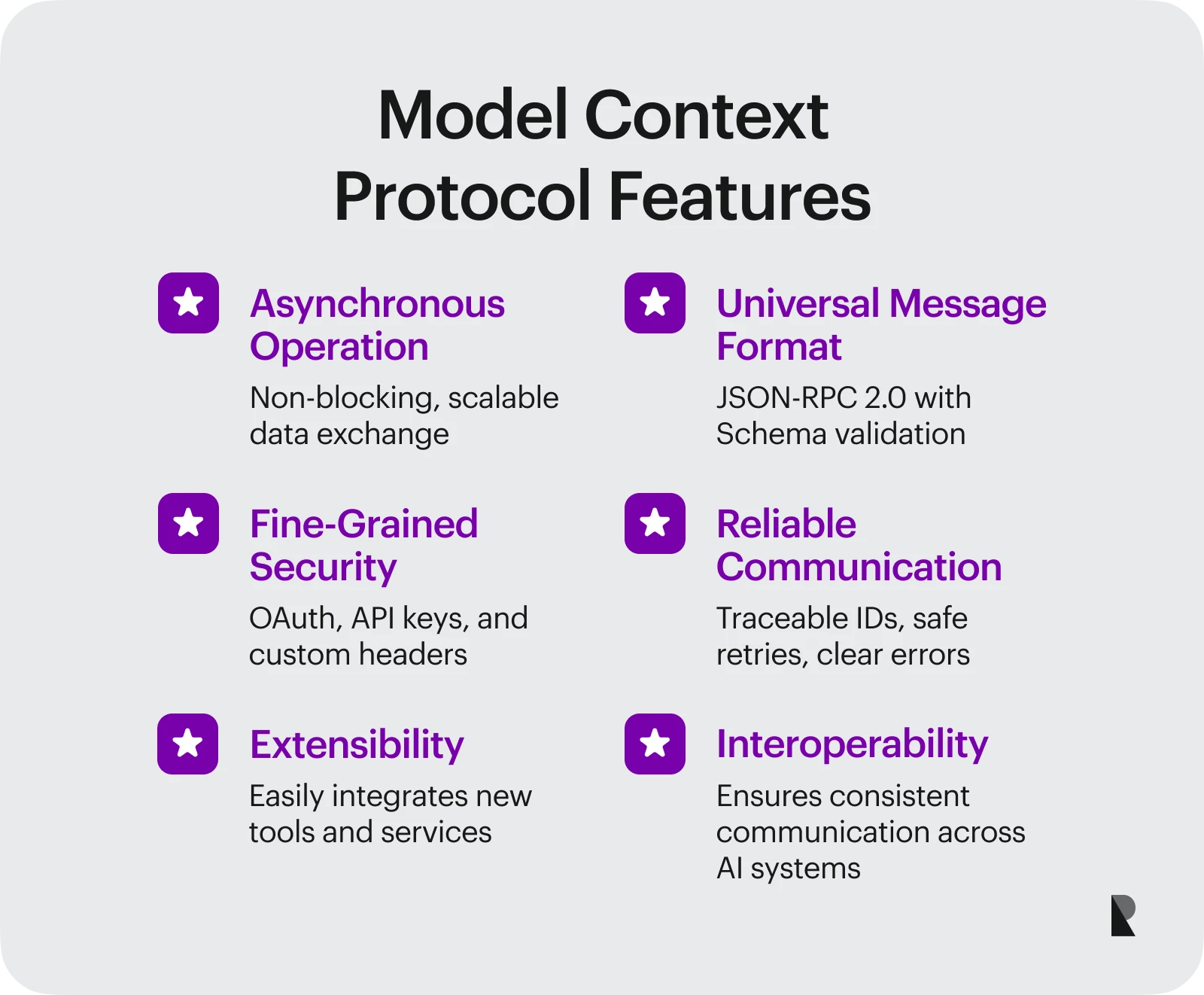

MCP has become a robust standard that defines how AI agents, tools, and services communicate. Its strength comes from several core features:

1. Asynchronous operation

Model Context Protocol supports non-blocking communication (see Java MCP Server documentation), so clients and servers don't need to wait for each other to finish before continuing. This can improve performance and scalability, especially when retrieving data from slow or remote sources.
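Non-blocking communication works because each request has its own `id`: a client can keep several requests in flight and settle each one whenever its response arrives, in any order. Here is a minimal Promise-based sketch; the `PendingRequests` class is hypothetical, and the `sendLine` callback stands in for a real transport.

```javascript
// Hypothetical tracker for in-flight requests, keyed by JSON-RPC id.
class PendingRequests {
  constructor(sendLine) {
    this.sendLine = sendLine; // e.g. writes one JSON line to a server's stdin
    this.nextId = 1;
    this.pending = new Map(); // id -> { resolve, reject }
  }

  // Send a request and immediately return a Promise for its result.
  request(method, params = {}) {
    const id = this.nextId++;
    const promise = new Promise((resolve, reject) => this.pending.set(id, { resolve, reject }));
    this.sendLine(JSON.stringify({ jsonrpc: "2.0", id, method, params }));
    return promise;
  }

  // Called for every response that arrives, in whatever order.
  handleResponse(message) {
    const entry = this.pending.get(message.id);
    if (!entry) return; // unknown or already settled id
    this.pending.delete(message.id);
    if (message.error) entry.reject(new Error(message.error.message));
    else entry.resolve(message.result);
  }
}

// Two requests in flight; the second answer arrives first.
const sent = [];
const client = new PendingRequests((line) => sent.push(line));
const slow = client.request("resources/read", { uri: "file:///big-report.csv" });
const fast = client.request("tools/list");
client.handleResponse({ jsonrpc: "2.0", id: 2, result: { tools: [] } });
client.handleResponse({ jsonrpc: "2.0", id: 1, result: { contents: [] } });
Promise.all([slow, fast]).then(([a, b]) => console.log(a, b)); // { contents: [] } { tools: [] }
```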

2. Universal message format and structured flow

JSON-RPC 2.0 is used for all communication, and tool parameters are described and validated with JSON Schema Draft 07 (including enums, ranges, nullable values, and regex patterns).

This ensures every tool has the same request-response flow, making every interaction reliable and predictable.
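Tools describe their parameters with a JSON Schema `inputSchema`, and the receiving side checks arguments against it before doing any work. Real implementations use a full validator (for example the Ajv library, or Zod in the TypeScript SDK). The sketch below is a deliberately tiny, hand-rolled check that only covers `required`, primitive `type`, `enum`, `minimum`/`maximum`, `pattern`, and `additionalProperties: false`, just to show the idea. The `get_forecast`-style schema and the `validateArgs` name are hypothetical.

```javascript
// Hypothetical tool input schema (a small subset of JSON Schema).
const inputSchema = {
  type: "object",
  properties: {
    city: { type: "string", pattern: "^[A-Za-z ]+$" },
    days: { type: "number", minimum: 1, maximum: 7 },
    units: { type: "string", enum: ["metric", "imperial"] },
  },
  required: ["city"],
  additionalProperties: false,
};

// Returns a list of problems; an empty list means the arguments are valid.
// Handles only string, number, and boolean property types.
function validateArgs(schema, args) {
  const errors = [];
  for (const key of schema.required || []) {
    if (!(key in args)) errors.push(`missing required "${key}"`);
  }
  for (const [key, value] of Object.entries(args)) {
    const rule = schema.properties[key];
    if (!rule) {
      if (schema.additionalProperties === false) errors.push(`unexpected "${key}"`);
      continue;
    }
    if (typeof value !== rule.type) { errors.push(`"${key}" must be a ${rule.type}`); continue; }
    if (rule.enum && !rule.enum.includes(value)) errors.push(`"${key}" must be one of ${rule.enum.join(", ")}`);
    if (rule.minimum !== undefined && value < rule.minimum) errors.push(`"${key}" must be >= ${rule.minimum}`);
    if (rule.maximum !== undefined && value > rule.maximum) errors.push(`"${key}" must be <= ${rule.maximum}`);
    if (rule.pattern && !new RegExp(rule.pattern).test(value)) errors.push(`"${key}" has an invalid format`);
  }
  return errors;
}

console.log(validateArgs(inputSchema, { city: "Lisbon", days: 3 })); // []
// Reports the missing city, the out-of-range days, and the unsupported units:
console.log(validateArgs(inputSchema, { days: 10, units: "kelvin" }).join("; "));
```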

3. Fine-grained security

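Security is a core part of the protocol's design.

MCP Servers can require authentication methods such as API keys, OAuth 2.0 Bearer tokens, or custom headers, so that only authorized clients can invoke specific tools or resources. For HTTP-based transports, the MCP specification defines an authorization flow based on OAuth 2.1; servers running locally over STDIO typically read credentials from their environment instead.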
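For remote servers using the Streamable HTTP transport, credentials travel in standard HTTP headers. The sketch below only builds the options for a JSON-RPC POST and sends nothing: an `Authorization: Bearer` header, an `Accept` header listing both `application/json` and `text/event-stream` as the transport requires, and the `MCP-Protocol-Version` and `Mcp-Session-Id` headers used in the 2025-06-18 revision of the spec. The endpoint URL, token, session ID, and `buildMcpPost` helper are hypothetical.

```javascript
// Hypothetical helper: build fetch() options for one JSON-RPC message
// sent to a Streamable HTTP endpoint.
function buildMcpPost({ accessToken, sessionId, protocolVersion = "2025-06-18" }, message) {
  const headers = {
    "Content-Type": "application/json",
    Accept: "application/json, text/event-stream", // reply may be plain JSON or an SSE stream
    "MCP-Protocol-Version": protocolVersion,
  };
  if (accessToken) headers.Authorization = `Bearer ${accessToken}`; // e.g. an OAuth access token
  if (sessionId) headers["Mcp-Session-Id"] = sessionId;             // only if the server assigned one
  return { method: "POST", headers, body: JSON.stringify(message) };
}

const options = buildMcpPost(
  { accessToken: "example-token", sessionId: "abc123" },
  { jsonrpc: "2.0", id: 5, method: "tools/list" }
);
// Against a real (hypothetical) endpoint: await fetch("https://mcp.example.com/mcp", options)
console.log(options.headers.Authorization); // Bearer example-token
```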

4. Reliable communication

MCP's reliability comes from its use of JSON-RPC. Every request carries an ID, so each response can be matched accurately to its request, and errors use standard codes that help with debugging. This structured communication makes failures easy to detect and makes it easier to retry operations where repeating them is safe, which is particularly important in distributed systems.
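Retrying is only safe when repeating an operation cannot cause harm. MCP tools can carry optional annotations such as `readOnlyHint` and `idempotentHint`, which a client can use as one input to its retry policy. The specification treats these as hints rather than guarantees, so clients should not rely on them from untrusted servers. Here is a minimal sketch with hypothetical tool descriptors and a hypothetical `shouldRetry` helper.

```javascript
// Hypothetical tool descriptors with MCP-style annotations.
const searchTool = { name: "search_docs", annotations: { readOnlyHint: true } };
const setStatusTool = { name: "set_card_status", annotations: { readOnlyHint: false, idempotentHint: true } };
const sendEmailTool = { name: "send_email", annotations: { readOnlyHint: false, idempotentHint: false } };

// Retry only tools whose annotations say repeating the call has no extra effect,
// and only for a bounded number of attempts.
function shouldRetry(tool, attempt, maxAttempts = 3) {
  if (attempt >= maxAttempts) return false;
  const a = tool.annotations || {};
  return a.readOnlyHint === true || a.idempotentHint === true;
}

for (const tool of [searchTool, setStatusTool, sendEmailTool]) {
  console.log(tool.name, shouldRetry(tool, 1));
}
// search_docs true
// set_card_status true
// send_email false
```

A tool with no annotations falls through to `false`, which is the conservative default: sending the same email twice is worse than surfacing the error.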

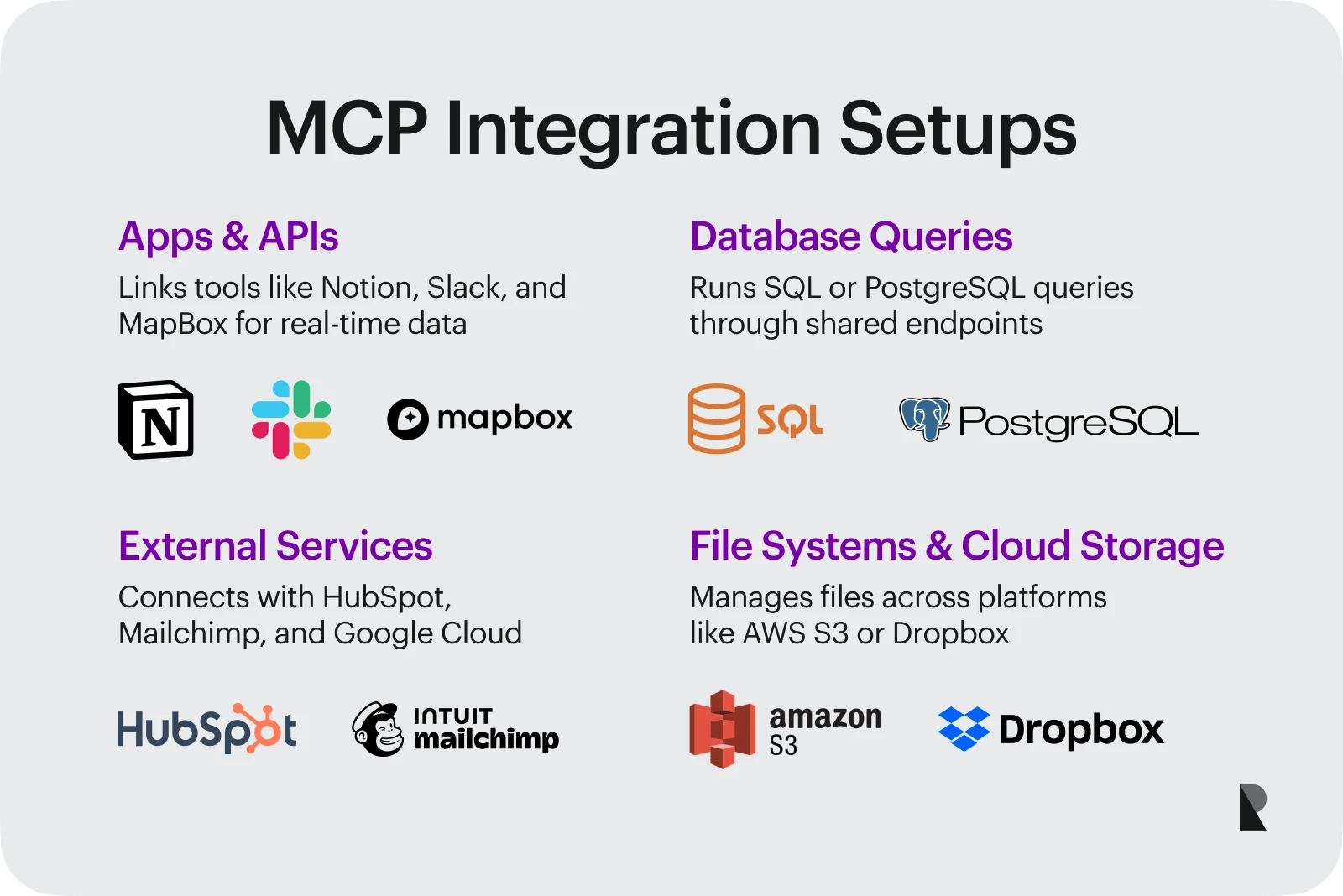

Real-World MCP Integrations

MCP exposes available tools and resources so they can be discovered and used by any MCP-aware agent.

The idea behind MCP came from a typical developer problem: repetitive workflows with limited and inconsistent access to apps, databases, and external platforms.

MCP makes it easier to integrate with different environments, whether they are popular applications, public APIs, structured databases, or external platforms.

Some standard integration setups include:

1. Apps and APIs integrations

With MCP, AI agents can connect to existing apps (like Monday.com, Notion, or Slack) or APIs (such as OpenWeather, Finnhub Stock, or Mapbox) to deliver real-time updates or access data without needing a custom integration for each system.

Hiring web application development companies can be a smart move if you're new to integrating your web apps with MCP. They have the experience to handle complex integrations without the trial and error you'd face doing it alone.

2. Database queries

MCP allows AI agents to query structured data stores, such as SQL databases like PostgreSQL, or document stores. This eliminates the need to build separate database connections for each agent, since the database can be exposed once through MCP and then reused.

For instance, Punit offers a detailed walkthrough on using MCP to query PostgreSQL, showing how agents can retrieve structured results in real time.

3. Access to external services

MCP integrates seamlessly with platforms such as HubSpot, Mailchimp, and Google Cloud Storage, allowing AI agents to safely send emails, update data, and handle file management tasks.

For a real-world example, check out Generect's in-depth tutorial on connecting MCP with HubSpot.

MCP Applications and Impact

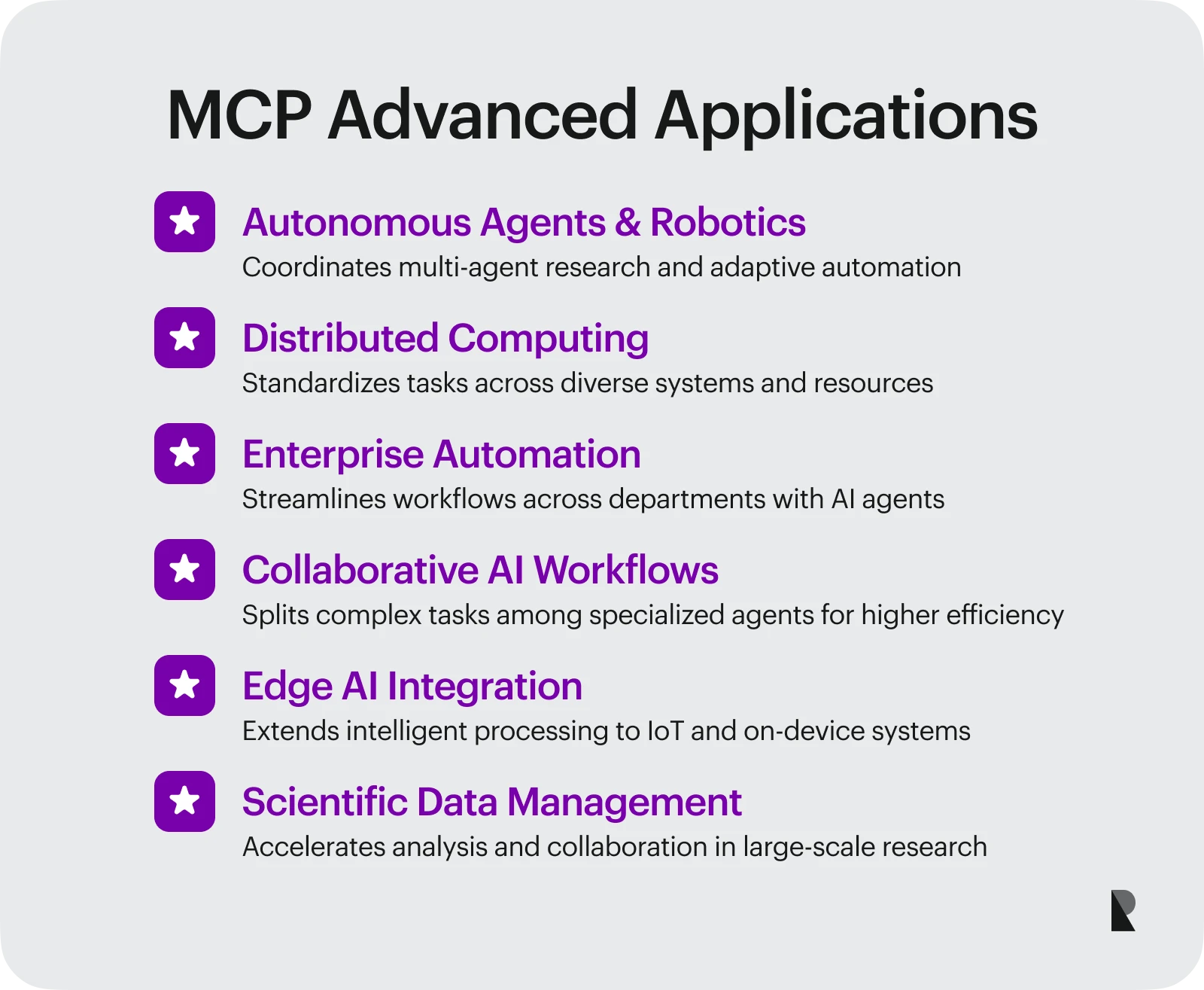

While MCP is mainly built for applications, APIs, and external services, its flexible design makes it adaptable to many use cases. It helps AI agents seamlessly collaborate to manage complex processes and support independent decision-making across various situations.

MCP has become a disruptive solution thanks to its adaptability. Compared with traditional one-off integrations, it brings greater scalability, smoother integration, and faster access to real-time data.

Below are some areas where MCP is making a real impact beyond typical integrations.

1. Autonomous agents and robotics

Anthropic’s research published in June 2025 introduced a multi-agent research system powered by the company's Model Context Protocol (MCP).

This setup allows autonomous agents to work together in planning research tasks, spin off parallel subagents, and search for information simultaneously.

The approach enhances the efficiency and depth of research workflows by allowing multiple agents to coordinate tasks, integrate tools, and dynamically adapt to new findings.

2. Distributed computing

MCP also integrates multiple systems, allowing tasks to be executed and resources to be managed across various platforms.

MCP reduces development time and complexity for AI systems in distributed computing environments by providing a standardized integration framework.

3. Enterprise automation

MCP also improves enterprise automation by enabling multiple AI agents to collaborate across departments, automating complex workflows with minimal human intervention.

Automation Anywhere demonstrates this by using MCP to connect different systems, allowing agents to independently manage tasks such as the procure-to-pay process.

4. Collaborative AI workflows

One of MCP's main use cases is promoting collaborative AI workflows, where multiple agents work together toward a common goal.

An article by MarkTech describes a good example of a collaborative AI workflow built on MCP, illustrating how a problem can be divided into smaller tasks. Specialized agents handle each part, much like a team of experts managing different aspects of a project.

This automation shows how MCP coordinates multiple agents to improve efficiency and the quality of results.

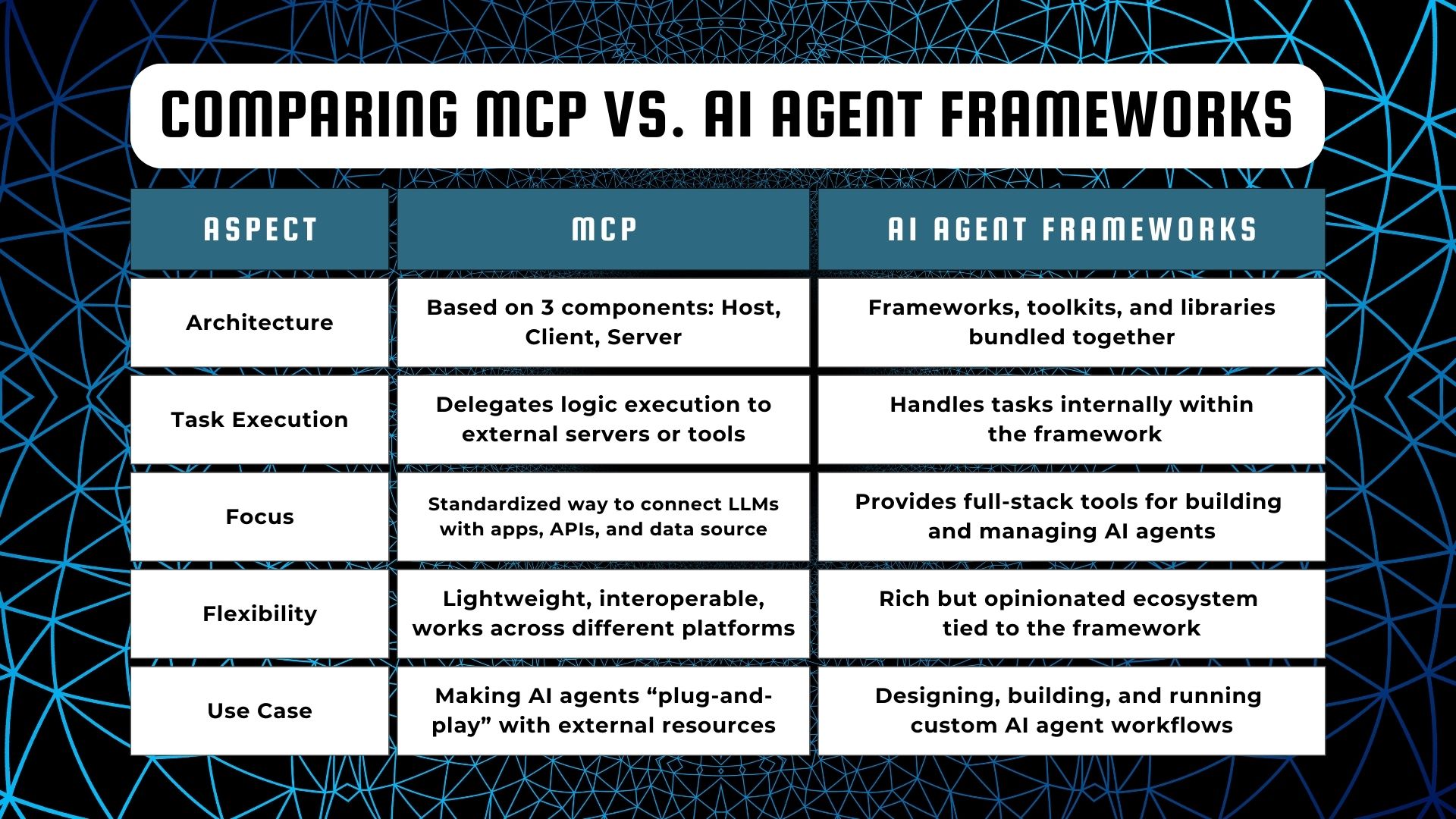

Comparing MCP vs. AI Agent Frameworks

Understanding the differences between MCP and AI agent frameworks can help you see how these two technologies can work together to solve a lot of AI problems.

The rapid pace of new technology releases has led to confusion between MCP and frameworks like LangChain and AutoGPT.

While both are fundamental for building AI agents, they technically serve different purposes. This ambiguity is even discussed on platforms like Reddit (see thread).

Understanding these differences helps clarify how both technologies can work together to tackle a wide range of AI problems effectively.

Architectural differences

In general, MCP architecture leans toward a standardized communication protocol, which includes the three primary components mentioned above: Clients, Servers, and Hosts.

It delegates logic execution to external servers or tools, focusing on interoperability and consistent access to data and services rather than directly embedding decision-making or memory management.

On the other hand, AI Agent Frameworks are comprehensive developer frameworks, toolkits, and libraries for building AI agents. They act as a “control center,” using chains, agents, and memory modules to coordinate agent operations efficiently.

These frameworks usually come with built-in tools for decision-making, memory management, and triggering specific actions, which allows developers to concentrate on designing higher-level workflows.

Task management approaches

With MCP, tasks are executed externally by MCP Servers. This allows tasks to be assigned dynamically based on an agent’s capabilities and supports smooth scaling without modifying the protocol or the agent’s core logic.

In contrast, AI agent frameworks handle tasks internally within the framework (as noted in the LangChain blog). Because task management is tightly linked with the agent’s internal structure, scaling can be more complex and sometimes requires adjustments to the agent’s core logic or components.

MCP strengths and weaknesses

MCP provides a universal communication protocol for AI agents and external resources. It simplifies integration, scales efficiently, and is highly reliable, especially when connecting multiple tools and services.

However, it has limitations. Much of the ecosystem depends on the three primary components and on the availability and reliability of MCP Servers. Users without a solid understanding of MCP may find it confusing or misuse it, which could lead to operational issues.

MCP ecosystem and adoption

Although the Model Context Protocol (MCP) is relatively new, it has already been adopted by numerous companies, including:

- Anthropic

- Block (Square)

- Apollo

- Replit

- Cloudflare

- IBM

- Stripe

- GitHub

- OpenAI

In addition to corporate adoption, there are also several community-driven projects, such as:

Open MCP proxy. This proxy server handles several requests at once, making it easier for clients and MCP servers to communicate.

MCPHub. A discovery service that helps users identify and connect with available MCP servers.

mcpdotnet. A comprehensive .NET 8 implementation of MCP that lets developers add MCP to .NET apps.

As the ecosystem grows, more SDKs, servers, and compatible clients are being developed. Check out the About page on the official MCP website to learn more.

MCP roadmap: What’s next?

With major companies adopting MCP, the protocol is on its way to gaining richer features for standardizing AI system connectivity.

According to its latest roadmap, the key priorities for the coming months include:

- Improved Asynchronous Operations

- Enhanced Authorization and Security

- Reference Client and Server Implementations

- MCP Registry Development

For detailed information on MCP’s governance structure and ways to participate in the community, see the Model Context Protocol Governance page.

Getting Started with MCP

Integrating Model Context Protocol into your project isn't always easy, but it's not too hard either. Here's a short guide to help you get going.

Before you start, it's important to get reliable information from official sources. The official MCP documentation and the official SDK repositories are the best starting points.

Of course, to get started, you'll first need to choose the correct SDKs and libraries for your project. If you're a beginner, here are some recommendations:

- Python SDK is the optimal choice for exploring MCP servers and clients.

- TypeScript SDK (which also works from plain JavaScript) is ideal for integrating MCP into web applications.

- Java SDK is recommended for enterprise-level applications.

- C# SDK is intended for .NET developers building MCP-compatible applications.

In this guide, I'll use JavaScript since it's the language I'm most familiar with, but feel free to use whichever SDK you're comfortable with.

Step 1: Set up your development environment

First off, you need to install the MCP Inspector CLI globally using NPM by typing the command below:

```shell
npm install -g @modelcontextprotocol/inspector-cli
```

Step 2: Initialize your project

Next, create the project folder and install the MCP SDK and Zod (used to describe tool inputs) with the following commands:

```shell
mkdir my_mcp_project
cd my_mcp_project
npm init -y
npm install @modelcontextprotocol/sdk zod
```

Then, create a file named server.js inside the my_mcp_project folder.

Step 3: Create MCP server

Now it's time for us to create a simple MCP Server. Type the following into your code editor inside your server.js file:

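```javascript
// server.js: a minimal MCP server that exposes one tool over stdio.
const { McpServer } = require("@modelcontextprotocol/sdk/server/mcp.js");
const { StdioServerTransport } = require("@modelcontextprotocol/sdk/server/stdio.js");
const { z } = require("zod");

async function main() {
  // The name and version are reported to clients during initialization.
  const server = new McpServer({ name: "my-mcp-server", version: "1.0.0" });

  // Register a "greet" tool; the Zod shape becomes the tool's input schema.
  server.registerTool(
    "greet",
    {
      title: "Greet",
      description: "Returns a greeting for the given name",
      inputSchema: { name: z.string() },
    },
    async ({ name }) => ({
      content: [{ type: "text", text: `Hello, ${name}!` }],
    })
  );

  // Exchange JSON-RPC messages over stdin/stdout. Log only to stderr
  // (console.error): anything printed to stdout would corrupt the protocol stream.
  const transport = new StdioServerTransport();
  await server.connect(transport);
}

main().catch((err) => {
  console.error("Server failed:", err);
  process.exit(1);
});
```

Step 4: Run the server locally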

Now it's time to run this locally. Type the following command in your terminal:

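```shell
node server.js
```

Note: The server now waits silently for JSON-RPC messages on its standard input, so seeing no output is expected (stop it with Ctrl+C). You can use MCP Inspector to interact with your server through a graphical interface, for example by running `npx @modelcontextprotocol/inspector node server.js`.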

Conclusion: Why MCP Matters and Next Steps

At its core, the Model Context Protocol (MCP) isn’t magic. It's simply a standardized way for AI models and tools to communicate. This protocol makes the next generation of AI assistants possible by making integrations easier to maintain and scale.

MCP is built around three main components: the host, the client, and the server. Its adaptability enables seamless integration with apps, APIs, databases, and other tools.

If you want to dive deeper into MCP updates and comprehensive guides, the official MCP documentation is a great place to start.

Oct 14, 2025